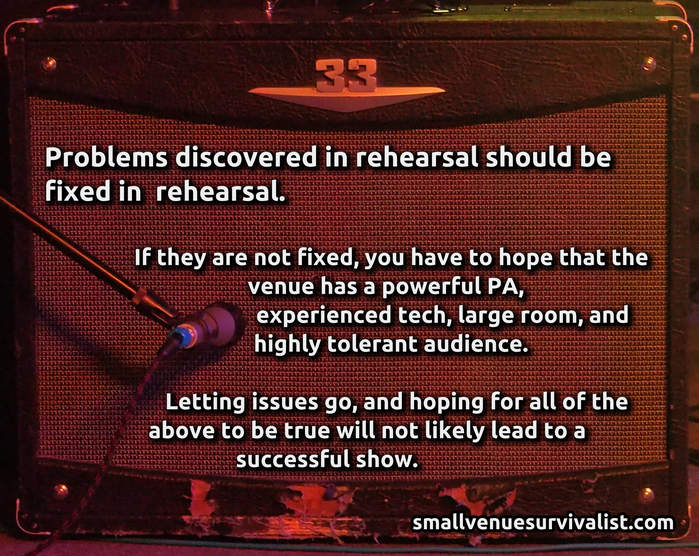

If you beat the dynamics out of a band that manages itself with dynamics, well…

Please Remember:

The opinions expressed are mine only. These opinions do not necessarily reflect anybody else’s opinions. I do not own, operate, manage, or represent any band, venue, or company that I talk about, unless explicitly noted.

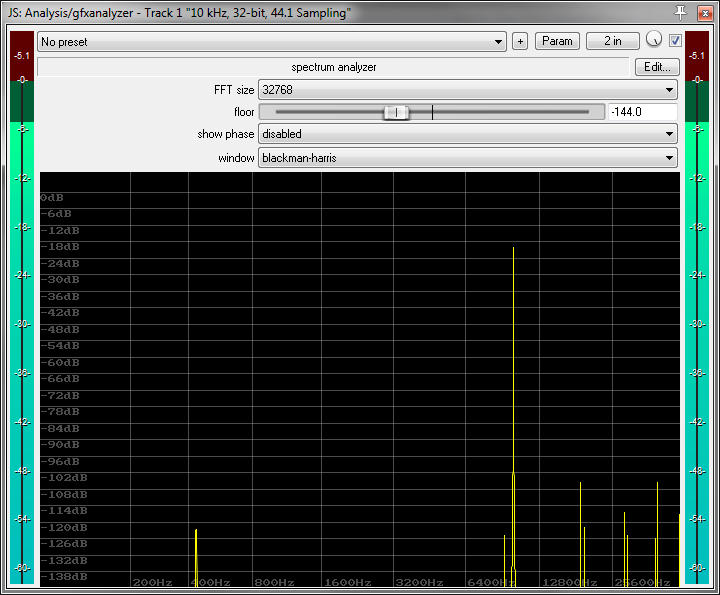

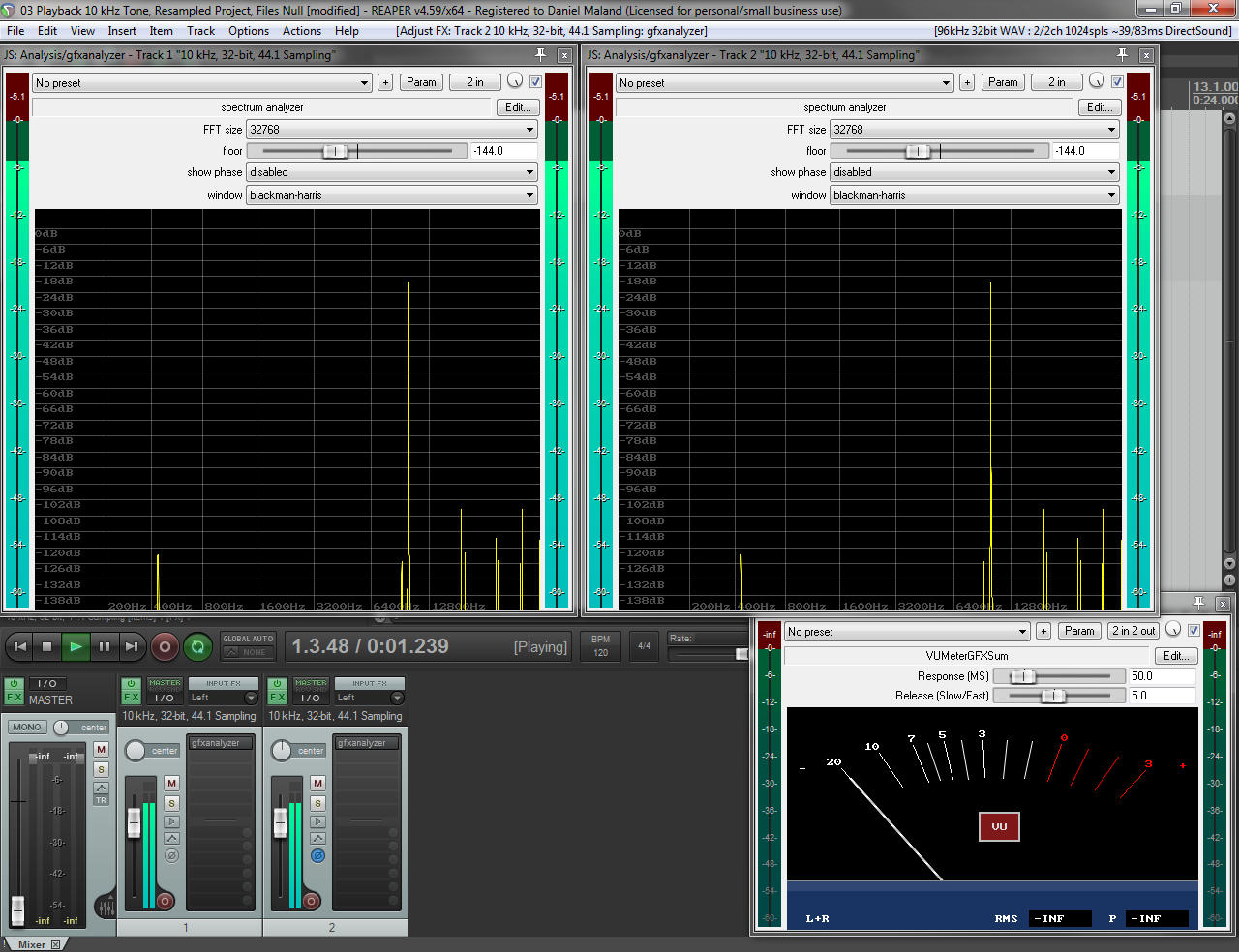

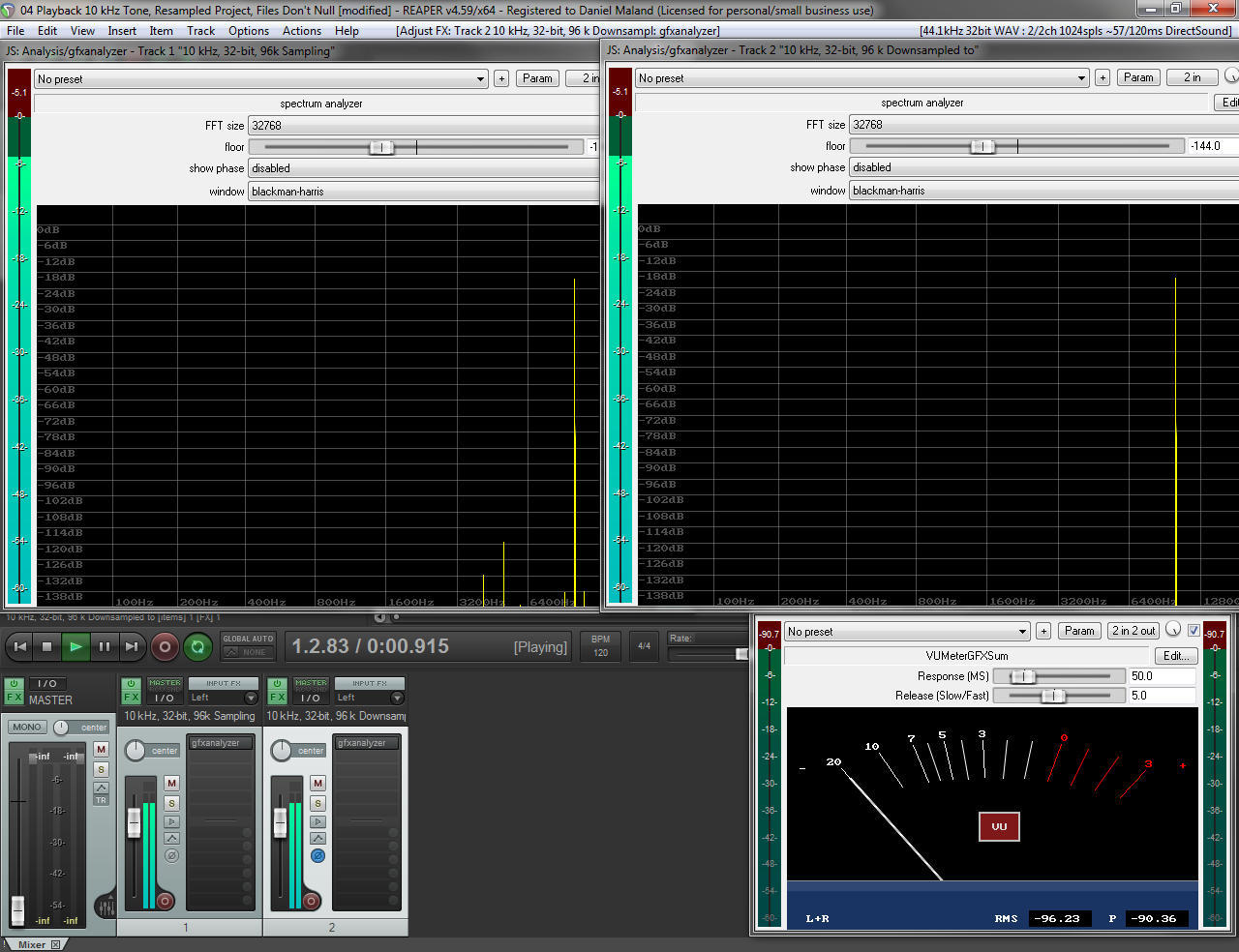

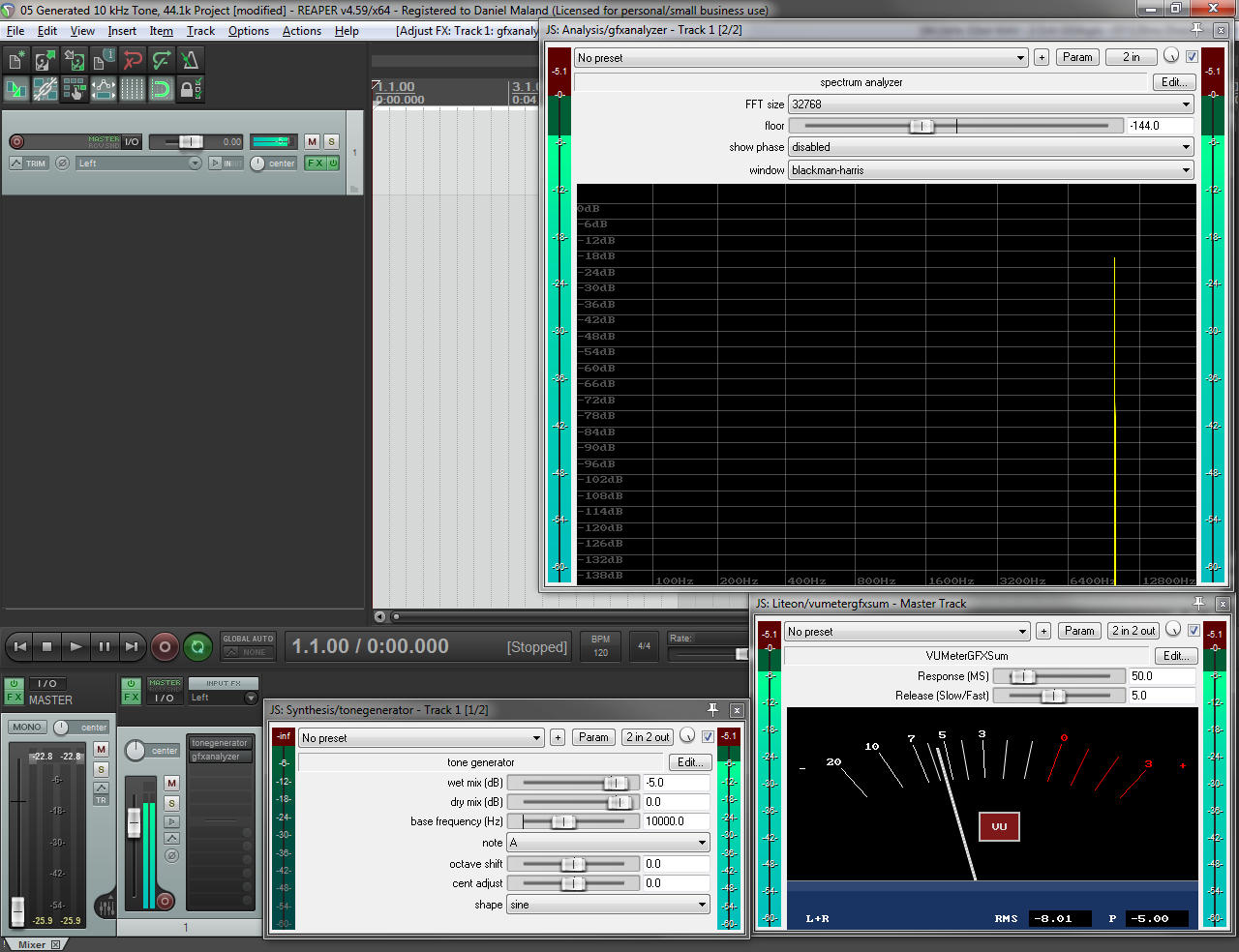

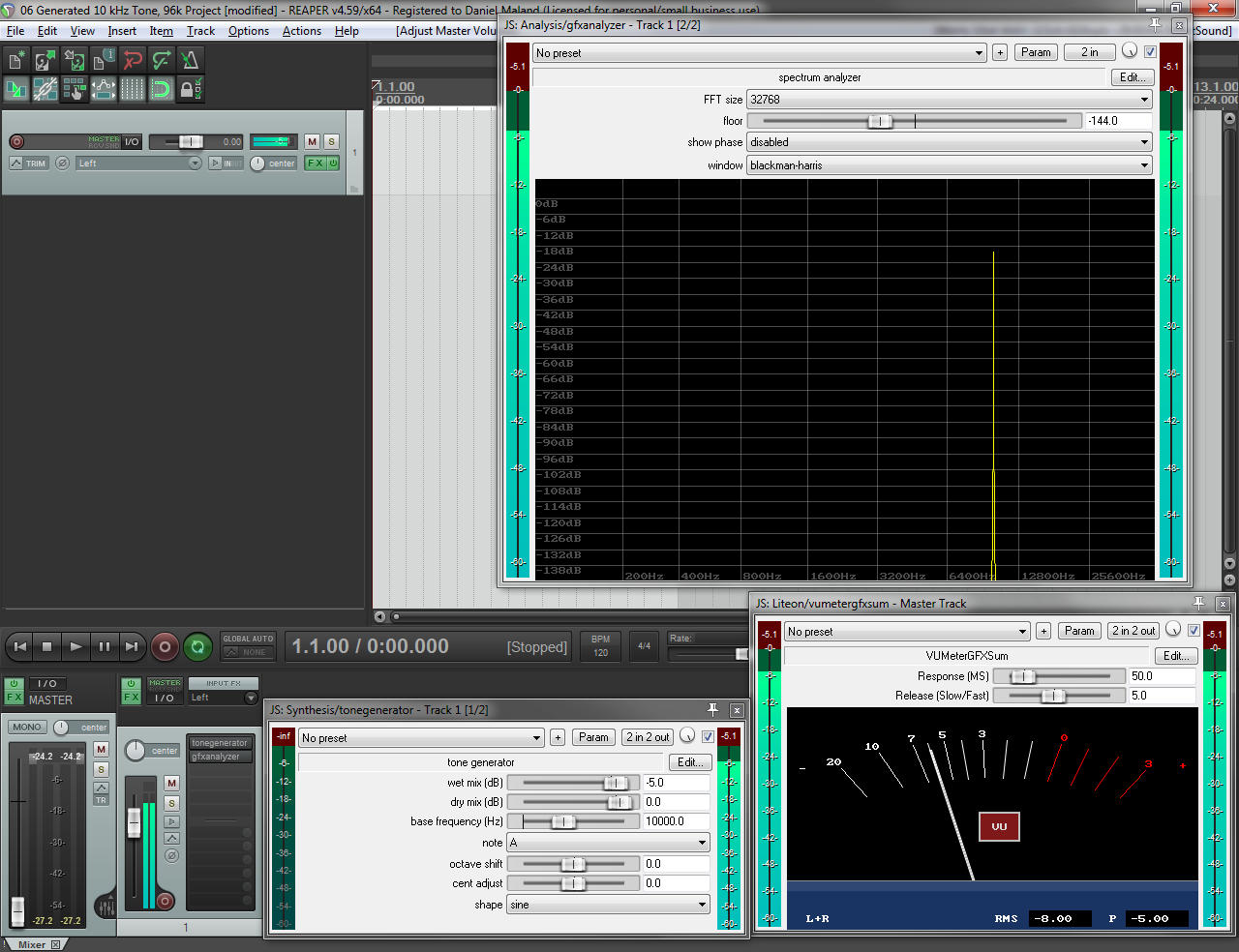

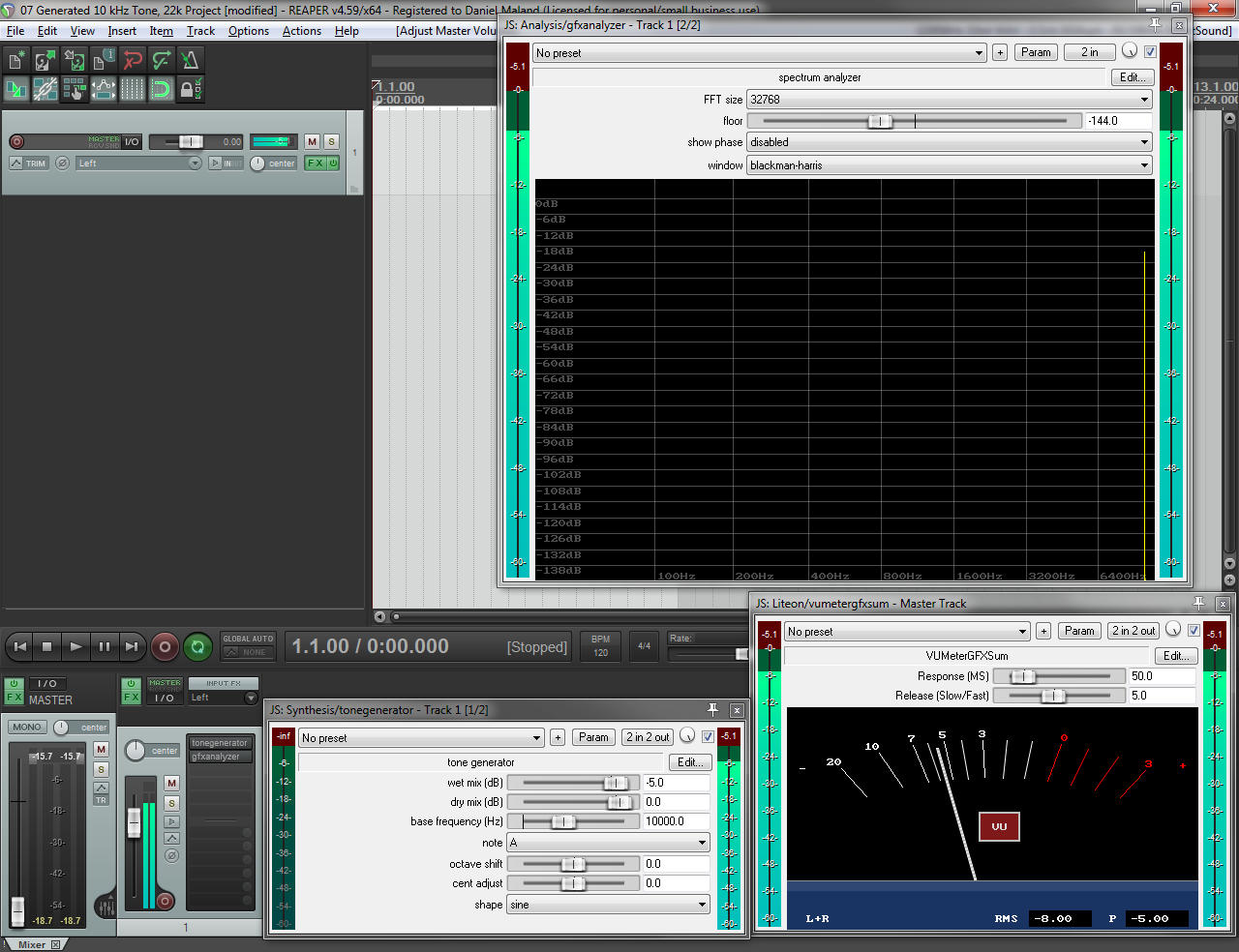

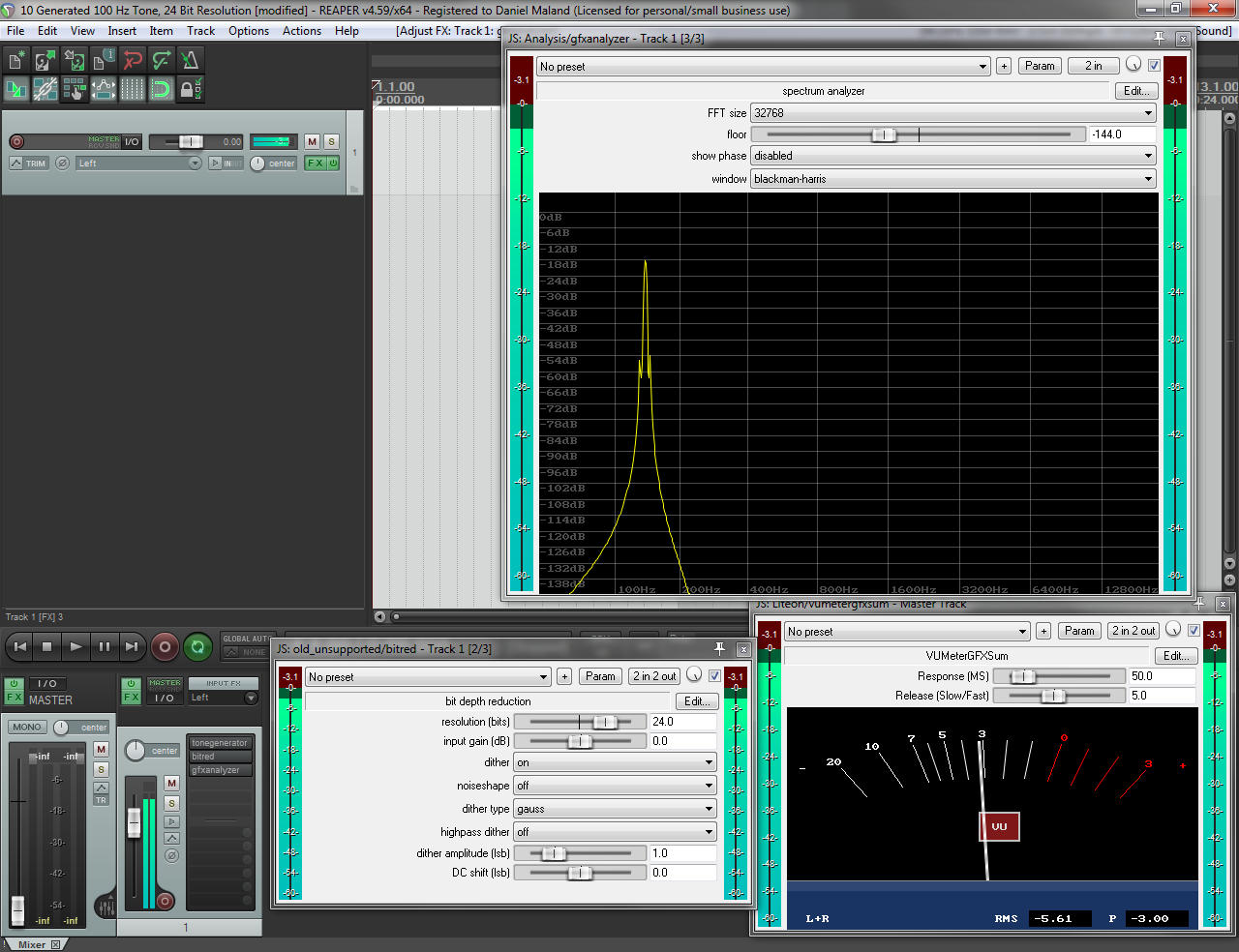

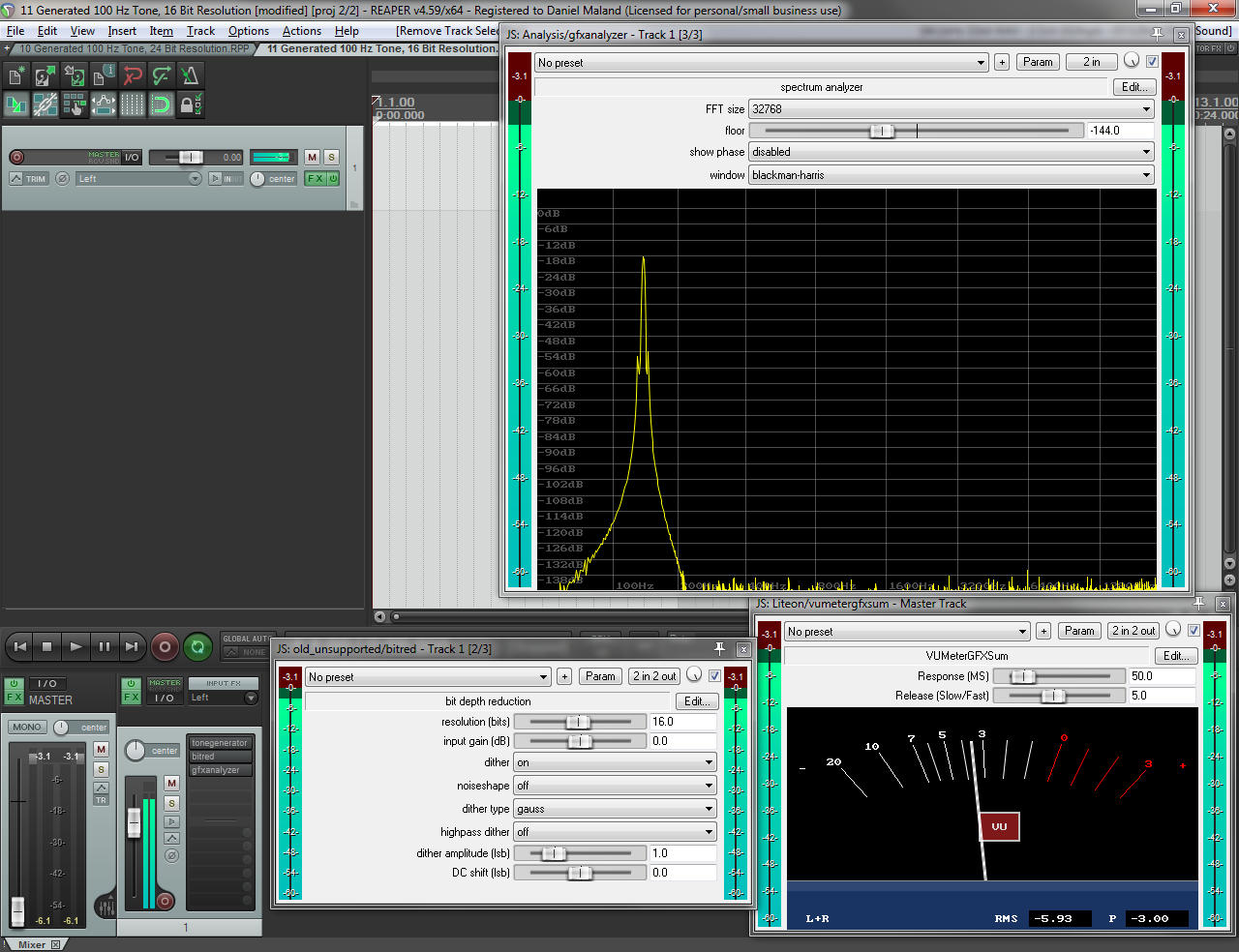

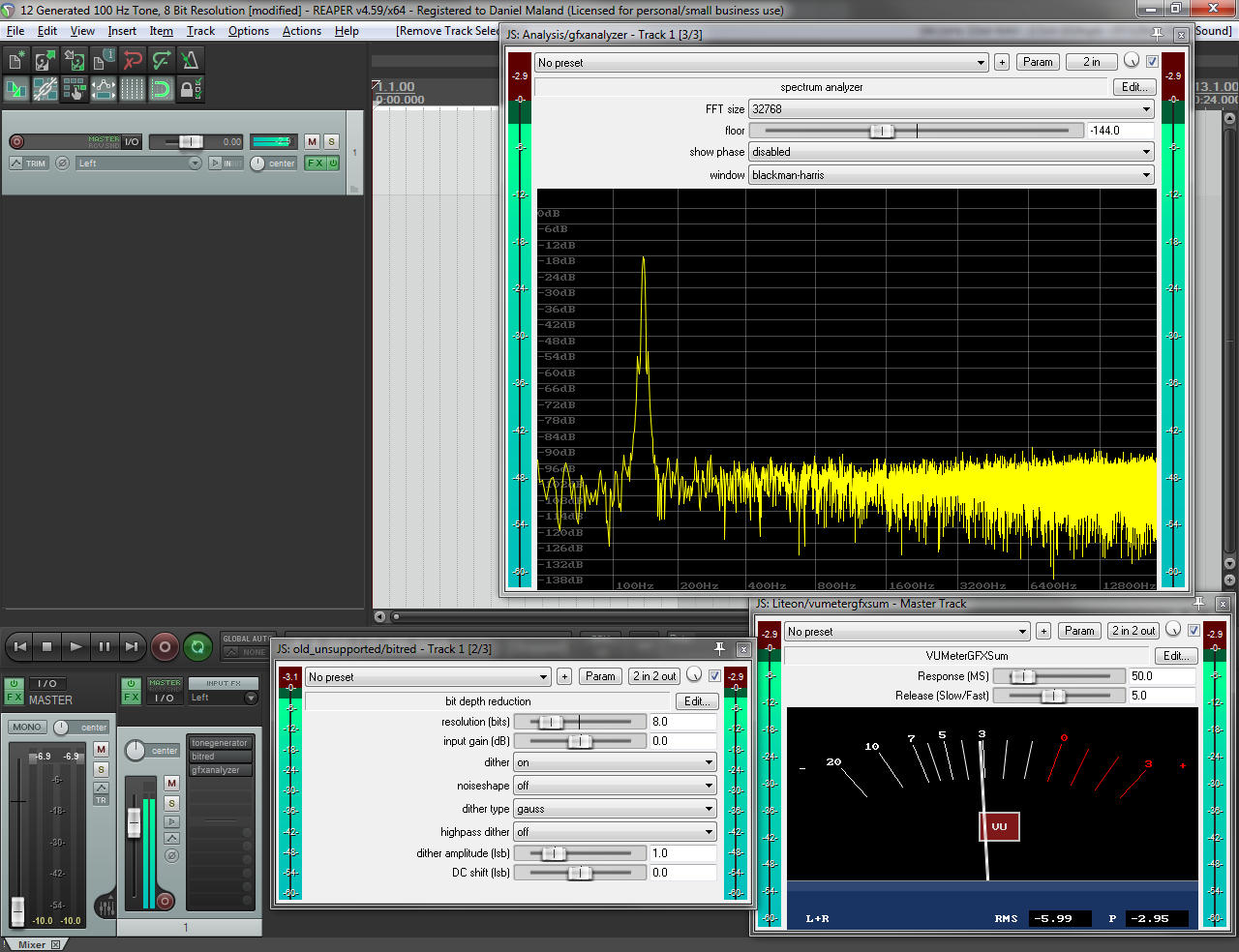

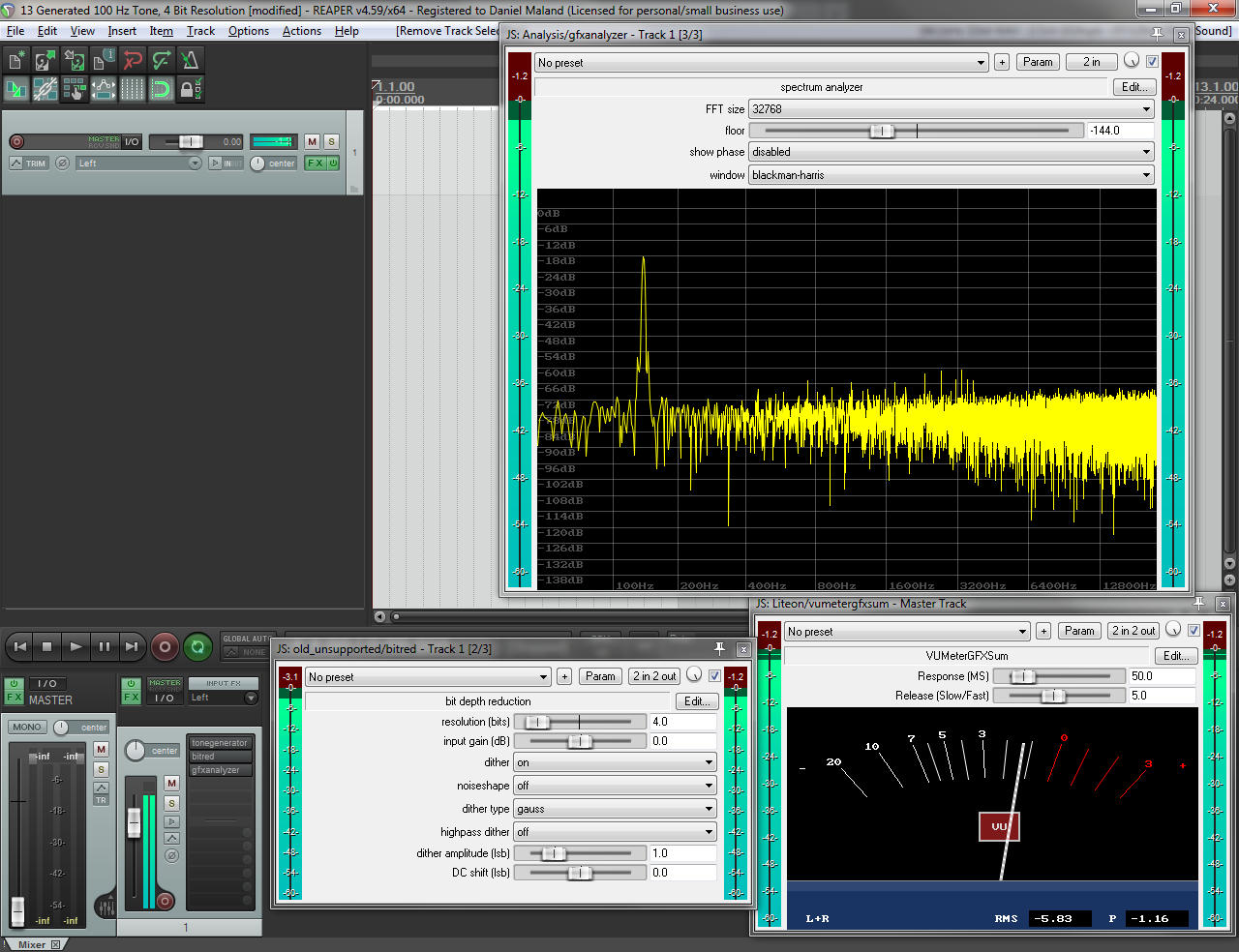

Want to use this image for something else? Great! Click it for the link to a high-res or resolution-independent version.

Just recently, I had the privilege of working on “The Last Floyd Show.” (The production provided the backdrop for that whole bit about the lighting upgrade that took forever.) We recorded the show to multitrack, and I was tasked with getting a mix done.

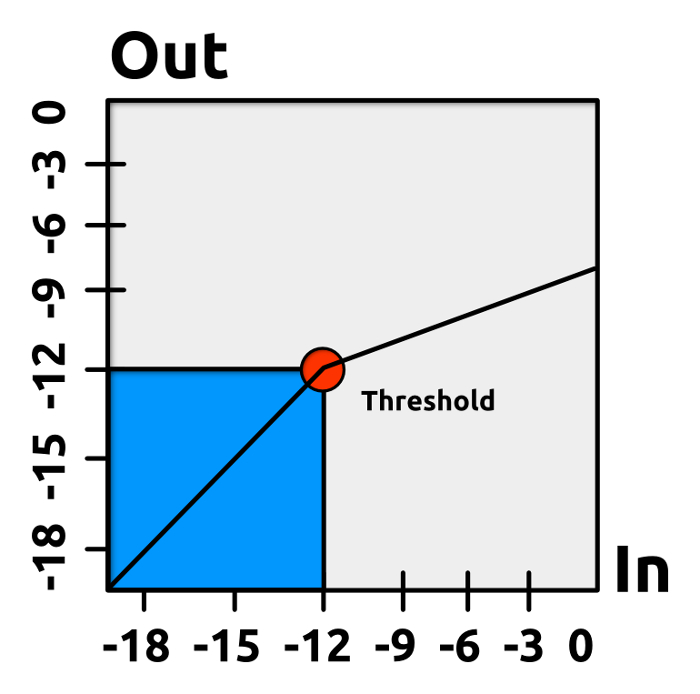

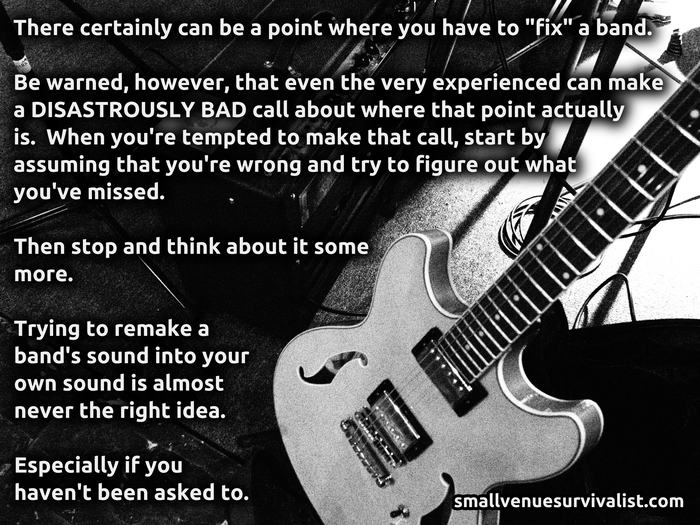

It was one of the toughest mixdowns I’ve attempted, mostly because I took the wrong approach when I got started. I attempted a “typical rock band” mix, and I ended up having to basically start over once…and then backtrack significantly twice more. Things started to work much more nicely when I backed WAY off on my channel compression – which is a little weird, because a lot of my “mix from live” projects actually do well with aggressive compression on individual channels. You grab the player’s level, hold it back from jumping around, fit ’em into the part of the spectrum that works, and everything’s groovy.

Not this time, though.

Because Pink Floyd is actually an old-timey bluegrass act that inhabits a space-rock body. They use “full-range” tones and dynamics extensively, which means that preventing those things from working is likely to wreck the band’s sound.

General Dynamics (Specific Dynamics, Too)

Not every Floyd tune is the same, of course, but take a listen over a range of their material and you’ll discover something: Pink Floyd gets a huge amount of artistic impact from big swings in overall dynamics, as well as the relative levels of individual players. Songs build into rolling, thunderous choruses, and then contract into gentle verses. There are “stings” where a crunchy guitar chord PUNCHES YOU IN THE FACE, and then backs away into clean, staccato notes. Different parts ebb and flow around each other, with great, full-range tones possible across multiple instruments – all because of dynamics. When it’s time for the synth, or organ, or guitar to be in the lead, that’s what is in the lead. They just go right past the other guys and “fill the space,” which is greatly enabled by the other guys dropping far into the background.

If you crush the dynamics out of any part of a Pink Floyd production, it isn’t Pink Floyd anymore. It’s people playing the same notes as Floyd songs without actually making those songs happen. If those dynamic swings are prevented, the arrangements stop working properly. The whole shebang becomes a tangled mess of sounds running into each other, which is EXACTLY what happened to me when I tried to “rock mix” our Floyd Show recording.
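To put some rough numbers on what “crushing the dynamics” means, here is a minimal sketch of a static, hard-knee compressor curve. The threshold, ratio, and the verse/chorus levels are made-up values for illustration, not the settings from the actual mixdown, and a real compressor also has attack and release behavior that this ignores.

```python
def compress_db(level_db, threshold_db=-30.0, ratio=8.0):
    """Static hard-knee compressor: output level in dB for an input level in dB.

    threshold_db and ratio are hypothetical "aggressive" settings.
    """
    if level_db <= threshold_db:
        # Below the threshold the signal passes through unchanged.
        return level_db
    # Above the threshold, every `ratio` dB of input yields 1 dB of output.
    return threshold_db + (level_db - threshold_db) / ratio


# Hypothetical levels for one channel during a gentle verse and a big chorus.
quiet_verse_db = -26.0
loud_chorus_db = -6.0

swing_in = loud_chorus_db - quiet_verse_db
swing_out = compress_db(loud_chorus_db) - compress_db(quiet_verse_db)

print(f"Swing going in:  {swing_in:.1f} dB")
print(f"Swing coming out: {swing_out:.1f} dB")
```

With these assumed numbers, a 20 dB swing between verse and chorus shrinks to 2.5 dB. That is great for pinning a player in place, and terrible if the swing was the arrangement.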

This usage of dynamics, especially as a self-mix tool, is something that you mostly see in “old school acoustic-music” settings. Rock and pop acts these days are more about a “frequency domain” approach than a “volume domain” sort of technique. It’s not that there’s no use of volume at all; it’s just that the overwhelming emphasis seems to be on everybody finding a piece of the spectrum, and then just banging away with the vocals on top. (I’m not necessarily complaining. This can be very fun when it’s done well.) With that emphasis being the case so often, it’s easy to get suckered into doing everything with a “rock” technique. Use that technique in the wrong place, though, and you’ll be in trouble.

And yes, this definitely applies to live audio. In fact, this tendency to work on everything with modern rock tools is probably why I haven’t always enjoyed Floyd Show productions as much as I’ve wanted.

In The Flesh

When you have a band like Floyd Show on the deck, in real life, in a small room, the band’s acoustical peaks can overrun the PA to some extent. This is especially true if (like me) you aggressively limit the PA in order to keep the band “in a manageable box.” This, coupled with the fact that the band’s stage volume is an enormous contributor to the sound that the audience hears, means that a compressed, “rock band” mix isn’t quite as ruinous as it otherwise would be. That is, with the recording, the only sound you can hear is the reproduced sound, so screwing up the production is fatal. Live, in a small venue, you hear a good bit of reproduction (the PA) and a LOT of stage volume. The stage volume counteracts some of the “reproduction” mistakes, and makes the issues less obvious.

Another thing that suppresses “not quite appropriate” production is that you’re prepared to run an effectively automated mix in real time. When you hear that a part isn’t coming forward enough, you get on the appropriate fader and give it a push. Effectively, you put some of the dynamic swing back in as needed, which masks the mistakes made in the “steady state” mix setup. With the recording, though, the mix doesn’t start out as being automated – and that makes a fundamental “steady state” error stand out.

As I said before, I haven’t always had as much fun with Floyd Show gigs as I’ve desired. It’s not that the shows weren’t a blast (they were definitely enjoyable for me); it’s just that they could have been better.

And it was because I was chasing myself into a corner as much as anyone else was, all by taking an approach to the mix that wasn’t truly appropriate for the music. I didn’t notice, though, because my errors were partially masked by virtue of the gigs happening in a small space. (That masking being a Not Bad Thing At All.™)

The Writing On The Wall

So…what can be generalized from all this? Well, you can boil this down to a couple of handy rules for live (and studio) production:

If you want to use “full bandwidth” tones for all of the parts in a production, then separation between the parts will have to be achieved primarily in the volume and note-choice domain.

If you’re working with a band that primarily achieves separation by way of the volume domain, then you should refrain from restricting the “width” of the volume domain any more than is necessary.

The first rule comes about because “full bandwidth” tones allow each part to potentially obscure each other part. For example, if a Pink Floyd organ sound can occupy the same frequency space as the bass guitar, then the organ needs to either be flat-out quieter or louder at the appropriate times, or change its note choices, to avoid clashing with the bass. Notes played high enough will have fundamental frequencies that are away from the bass guitar’s fundamentals. This gives the separation that would otherwise be gotten by restricting the frequency range of the organ with EQ and/or tone controls. (Of course, working the equalization AND note choice AND volume angles can make for some very powerful separation indeed.)
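The note-choice part of that can be sketched with a bit of arithmetic. This is only an illustration: it assumes standard equal temperament with A4 at 440 Hz, MIDI note numbers with middle C as note 60, and a made-up “bass territory” running from the open low E string (E1) up to G3. The organ notes are hypothetical examples, not parts from any actual Floyd tune.

```python
def midi_to_hz(note, a4_hz=440.0):
    """Fundamental frequency of a MIDI note in equal temperament (A4 = note 69)."""
    return a4_hz * 2 ** ((note - 69) / 12)


# Assumed range of bass guitar fundamentals (hypothetical bounds for this sketch).
BASS_LOW = 28   # E1, open low string on a standard four-string bass
BASS_HIGH = 55  # G3, twelfth fret on the G string


def shares_bass_territory(note):
    """True if the note's fundamental falls inside the assumed bass range."""
    return midi_to_hz(BASS_LOW) <= midi_to_hz(note) <= midi_to_hz(BASS_HIGH)


# Two hypothetical organ notes: one voiced low, one voiced two octaves higher.
for name, note in [("C3", 48), ("C5", 72)]:
    hz = midi_to_hz(note)
    verdict = "overlaps the bass" if shares_bass_territory(note) else "clear of the bass"
    print(f"Organ {name}: {hz:.1f} Hz, {verdict}")
```

Fundamentals are only part of the story, of course. Harmonics from the bass still reach up into the organ’s range, which is why volume and EQ remain in the picture.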

The second rule is really just an extension of “getting out of the freakin’ way.” If the band is trying to be one thing, and the production is trying to force the band to be something else, the end result isn’t going to be as great as it could be. The production, however well intentioned, gets in the way of the band being itself. That sounds like an undesirable thing, because it is an undesirable thing.

Faithfully rendered Pink Floyd tunes use instruments with wide-ranging tones that run up and down – very significantly – in volume. These volume swings put different parts in the right places at the right time, and create the dramatic flourishes that make Pink Floyd what it is. Floyd is certainly a rock band. The approach is not exactly the same as an old-school bluegrass group playing around a single, omni mic…

…but it’s close enough that I’m willing to say: The lunatics on Pink Floyd’s grass are lying upon turf that’s rather more blue than one might think at first.