Purpose And Explanation

Ever since The Small Venue Survivalist became a reality, I have wanted to do a big experiment. I’ve been itching to round up a bunch of microphones that can be purchased for less than, or slightly more than, the price of the SM58, and then to objectively compare them to an SM58. (The Shure SM58 continues to be an industry standard microphone that is recognized and accepted everywhere as a sound-reinforcement tool.)

The key word above is “objectively.” Finding subjective microphone comparisons isn’t too hard. Sweetwater just put together (in 2017) a massive studio-mic shootout, and it was subjective. That is, the measurement data is audio files that you must listen to. This isn’t a bad thing, and it makes sense for studio mics – what matters most is how the mic sounds to you. Listening tests are everywhere, and they have their place.

In live audio, though, the mic’s sound is only one factor amongst many important variables. Further, these variables can be quantified. Resistance to mechanically induced noise can be expressed as a decibel number. So can resistance to wind noise. So can feedback rejection. Knowing how different transducers stack up against one another is critical for making good purchasing decisions, and yet this kind of quantitative information just doesn’t seem to be available.

So, it seems that some attempt at compiling such measurements might be helpful.

Planned Experimental Procedure

Measure Proximity Effect

1) Generate a 100 Hz tone through a loudspeaker at a repeatable SPL.

2) Place the microphone such that it is pointed directly at the center of the driver producing the tone. The front of the grille should be 6 inches from the loudspeaker baffle.

3) Establish an input level from the microphone, and note the value.

4) Without changing the orientation of the microphone relative to the driver, move the microphone to a point where the front of the grille is 1 inch from the loudspeaker baffle.

5) Note the difference in the input level, relative to the level obtained in step 3.

Assumptions: Microphones with greater resistance to proximity effect will exhibit a smaller level differential. Greater proximity effect resistance is considered desirable.
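The arithmetic in step 5 can be sketched as follows. This is only an illustration: the function names and the example readings are hypothetical, and it assumes both readings are taken on the same meter in decibels (or as linear amplitudes that are first converted to decibels).

```python
import math


def level_db(amplitude, reference=1.0):
    """Convert a linear amplitude to decibels relative to `reference`."""
    return 20.0 * math.log10(amplitude / reference)


def proximity_differential_db(level_6in_db, level_1in_db):
    """Level change when moving from 6 inches to 1 inch (step 5).

    A smaller result means a smaller level differential between
    the two positions.
    """
    return level_1in_db - level_6in_db


# Hypothetical readings, not measured data.
reading_at_6in = -30.0  # dBFS noted in step 3
reading_at_1in = -18.5  # dBFS observed in step 4
print(proximity_differential_db(reading_at_6in, reading_at_1in))
```

Under the stated assumption, the microphone with the smaller differential would be ranked as more resistant to proximity effect.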

Establish “Equivalent Gain” For Further Testing

1) Place a monitor loudspeaker on the floor, and position the microphone on a tripod stand. The stand leg nearest the monitor should be at a repeatable distance, at least 1 foot from the monitor enclosure.

2) Set the height of the microphone stand to a repeatable position that would be appropriate for an average-height performer.

3) Changing the height of the microphone as little as possible, point the microphone directly at the center of the monitor.

4) Generate pink noise through the monitor at a repeatable SPL.

5) Using a meter capable of RMS averaging, establish a -40 dBFS RMS input level.
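For anyone without an RMS-averaging meter, the target in step 5 could be checked in software along these lines. This is a sketch under assumptions: samples are floats where 1.0 is digital full scale, and RMS is referenced to an amplitude of 1.0 (so a full-scale sine reads about -3 dBFS; some meters use a different convention and will read roughly 3 dB higher). The function names are hypothetical.

```python
import math

TARGET_DBFS = -40.0  # the "equivalent gain" target from step 5


def rms_dbfs(samples):
    """RMS level of float samples (1.0 = full scale) in dBFS."""
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")
    return 10.0 * math.log10(mean_square)


def gain_trim_db(samples, target_dbfs=TARGET_DBFS):
    """How many dB of preamp gain to add (or remove, if negative)
    so that the captured noise lands on the target level."""
    return target_dbfs - rms_dbfs(samples)


# Hypothetical capture: a signal whose RMS is 0.1 (about -20 dBFS).
capture = [0.1, -0.1] * 500
print(round(gain_trim_db(capture), 2))
```

A negative trim means the input gain should come down by that many decibels before the remaining tests are run.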

Measure Mechanical Noise Susceptibility

1) Set the microphone such that it is parallel to the floor.

2) Directly above the point where the microphone grille meets the body, hold a solid, semi-rigid object (like an eraser, or small rubber ball) at a repeatable distance at least 1 inch over the mic.

3) Allow the object to fall and strike the microphone.

4) Note the peak input level created by the strike.

Assumptions: Microphones with greater resistance to mechanically induced noise will exhibit a lower input level. Greater resistance to mechanically induced noise is considered desirable.
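If the strike is recorded rather than read off a peak meter, the peak level in step 4 could be extracted as sketched below. It assumes float samples where 1.0 is digital full scale; `peak_dbfs` is a hypothetical helper name, and the sample values are made up for illustration.

```python
import math


def peak_dbfs(samples):
    """Largest absolute sample value, in dBFS (1.0 = full scale)."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return float("-inf")
    return 20.0 * math.log10(peak)


# Hypothetical recording of one strike, not measured data.
strike = [0.0, 0.02, -0.5, 0.3, -0.1, 0.01]
print(round(peak_dbfs(strike), 2))
```

Because every microphone is first set to the same equivalent gain, peak readings obtained this way should be comparable from mic to mic.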

Measure Wind Noise Susceptibility

1) Position the microphone on the stand such that it is parallel to the floor.

2) Place a small fan (or other source of airflow which has repeatable windspeed and air displacement volume) 6 inches from the mic’s grille.

3) Activate the fan for 10 seconds. Note the peak input level created.

Assumptions: Microphones with greater resistance to wind noise will exhibit a lower input level. Greater resistance to wind noise is considered desirable.

Measure Feedback Resistance

1) Set the microphone in a working position. For cardioid mics, the rear of the microphone should be pointed directly at the monitor. For supercardioid and hypercardioid mics, the microphone should be parallel with the floor.

2a) SM58 ONLY: Set a send level to the monitor that is just below noticeable ringing/feedback.

2b) Use the send level determined in 2a, unchanged, to create loop gain for the microphone under test.

3) Set a 1000 ms delay on the signal to the monitor.

4) Begin a recording of the mic’s output.

5) Generate a 500 ms burst of pink noise through the monitor. Allow the delayed feedback loop to sound several times.

6) Stop the recording, and make note of the peak level of the first repeat of the loop.

Assumptions: Microphones with greater feedback resistance will exhibit a lower input level on the first repeat. Greater feedback resistance is considered desirable.
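Step 6 amounts to reading the peak inside one time window of the recording. The sketch below assumes the moment the original burst starts in the recording is known, that the first repeat begins one delay time (1000 ms) later and lasts as long as the burst (500 ms), and that any extra acoustic or converter latency is small enough to ignore. The function name and the synthetic test signal are hypothetical.

```python
import math


def first_repeat_peak_dbfs(samples, sample_rate, burst_start_s,
                           delay_s=1.0, burst_s=0.5):
    """Peak level (dBFS, 1.0 = full scale) of the first delayed repeat."""
    start = int(round((burst_start_s + delay_s) * sample_rate))
    end = int(round((burst_start_s + delay_s + burst_s) * sample_rate))
    window = samples[start:end]
    peak = max((abs(s) for s in window), default=0.0)
    if peak == 0.0:
        return float("-inf")
    return 20.0 * math.log10(peak)


# Synthetic stand-in for a recording: a burst at 0.5 amplitude,
# silence, then a first repeat at 0.25 amplitude.
rate = 1000  # samples per second, kept small for the example
recording = [0.5] * 500 + [0.0] * 500 + [0.25] * 500 + [0.0] * 500
print(round(first_repeat_peak_dbfs(recording, rate, burst_start_s=0.0), 2))
```

In a real recording the window edges might need a little padding to account for latency; that adjustment is left out here to keep the sketch short.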

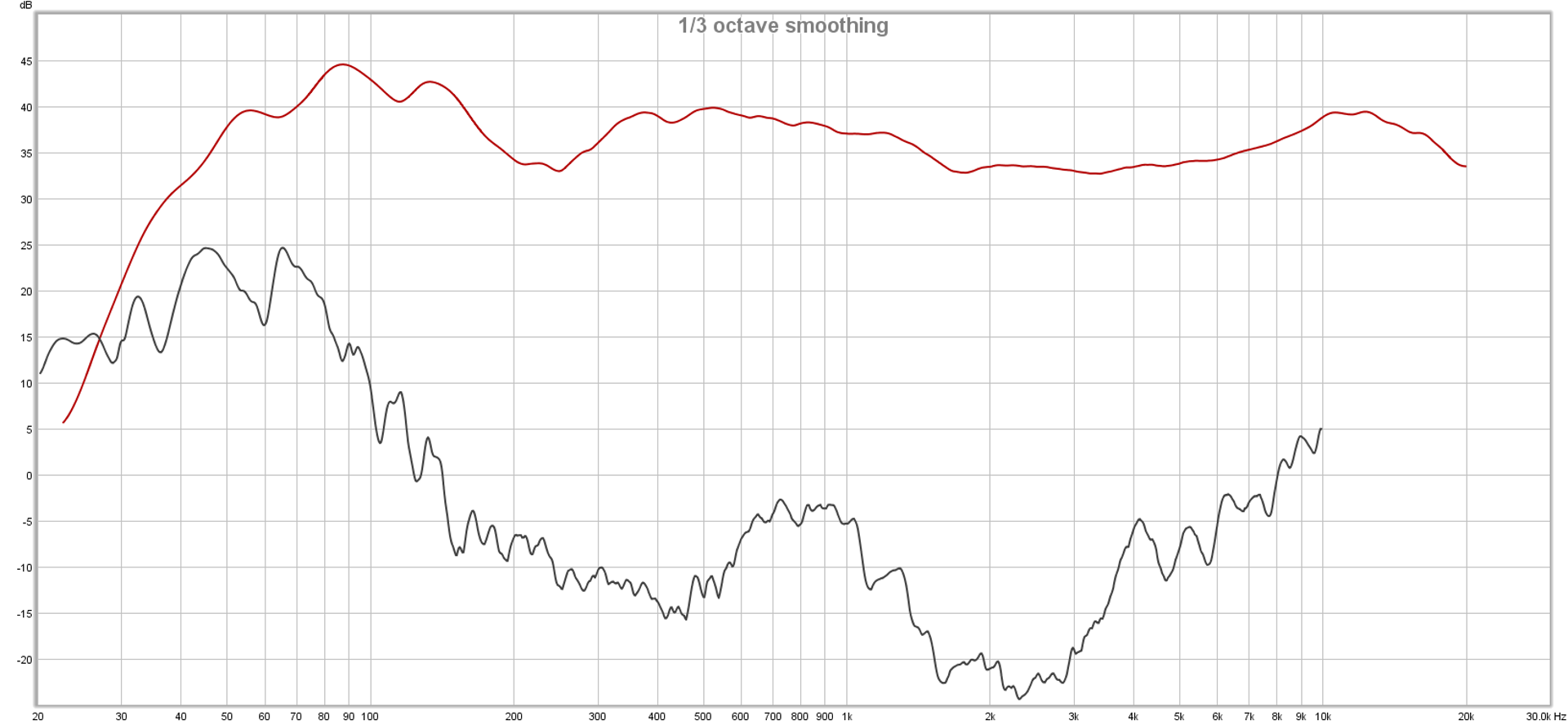

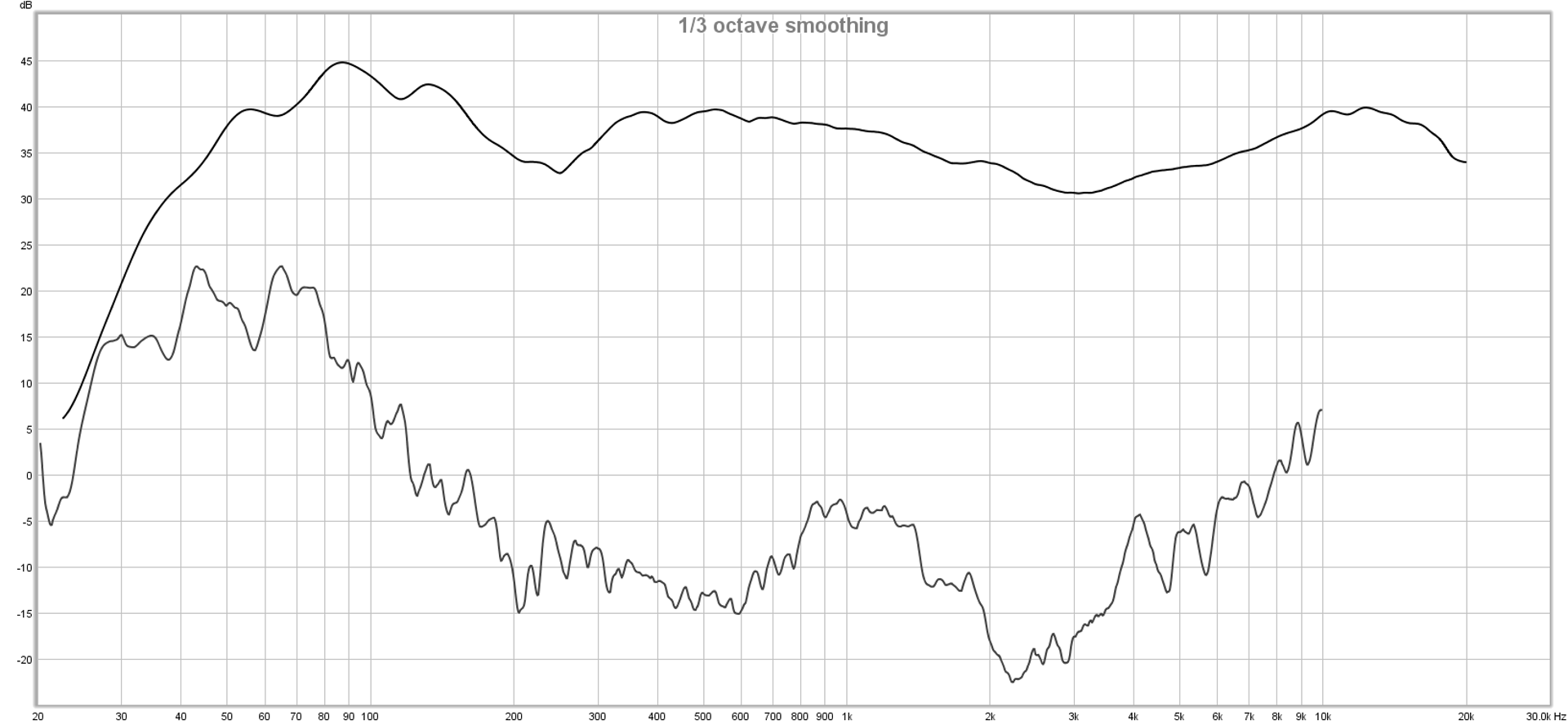

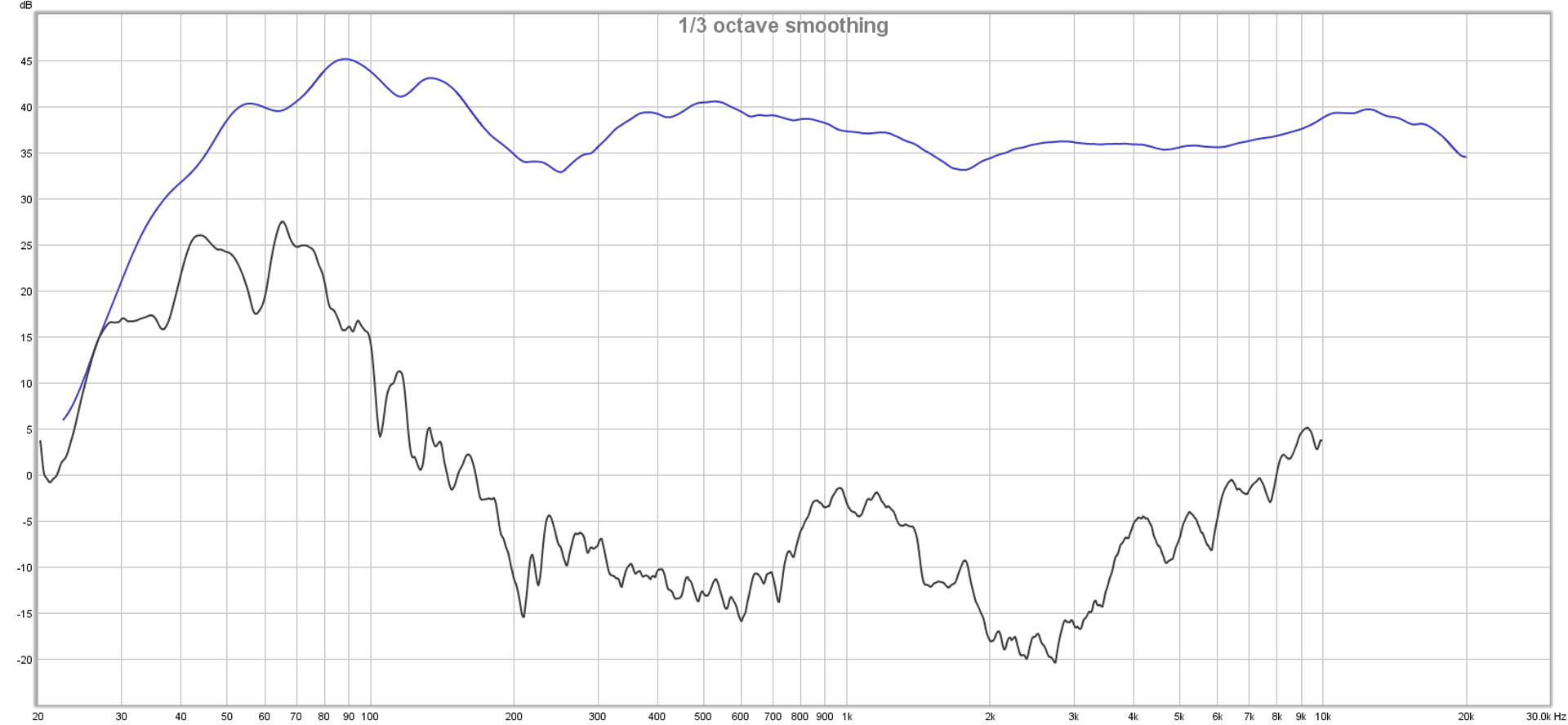

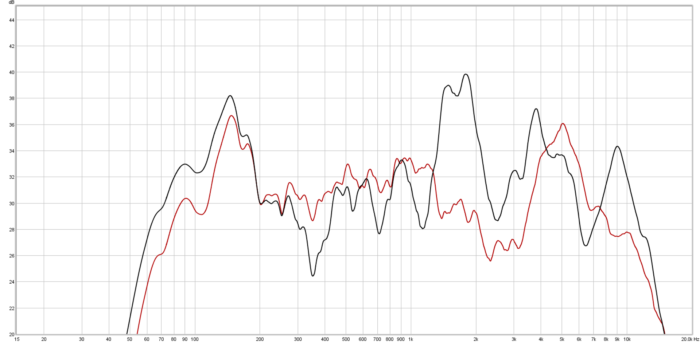

Measure Cupping Resistance

1) Mute the send from the microphone to the monitor.

2) Obtain a frequency magnitude measurement of the microphone in the working position, using the monitor as the test audio source.

3) Place a hand around as much of the mic’s windscreen as is possible.

4) Re-run the frequency magnitude measurement.

5) On the “cupped” measurement, note the difference between the highest response peak, and that frequency’s level on the normal measurement.

Assumptions: Microphones with greater cupping resistance will exhibit a smaller level differential between the highest peak of the cupped response and that frequency’s magnitude on the normal trace. Greater cupping resistance is considered desirable.
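The comparison in step 5 could be computed from two exported traces as sketched below. It assumes each trace is available as a mapping from frequency (Hz) to magnitude (dB), and that both traces share the same frequency points. The function name and the trace values are hypothetical.

```python
def cupping_differential(normal, cupped):
    """Find the highest peak on the cupped trace and compare it with
    the normal trace at that same frequency.

    Returns (peak_frequency_hz, differential_db).
    """
    peak_freq = max(cupped, key=cupped.get)
    return peak_freq, cupped[peak_freq] - normal[peak_freq]


# Made-up traces for illustration, not measured data.
normal_trace = {500: -2.0, 1000: 0.0, 2000: 1.0, 4000: 0.5}
cupped_trace = {500: -4.0, 1000: 6.0, 2000: 3.0, 4000: -1.0}

freq, diff = cupping_differential(normal_trace, cupped_trace)
print(freq, diff)
```

With these made-up numbers the cupped response peaks at 1000 Hz, 6 dB above the normal trace at that frequency.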

