One of my favorite lines from the movie “The Matrix” is the bit delivered by The Oracle after Neo accidentally knocks over a vase:

“What’ll really bake your noodle later on is, would you still have broken it if I hadn’t said anything?”

That’s where I get the whole “noodle baking” thing. It’s stuff that really messes with your mind when you consider the implications. Of course, our noodles don’t get baked as often as they used to. We’re a pretty sophisticated lot, not at all astounded by ideas like how looking up at the stars is actually looking back in time. We’re just used to it all. “Yeah, yeah, the light from the stars has been traveling to us for millions of years, we’re seeing the universe as it was before our civilization was really a thing, yadda, yadda…”

Even so, there’s still plenty of room for us audio types to have our minds sautéed – probably because the physics of audio is so accessible to us. Really messing around with light waves is tough, but all kinds of nerdery is possible when it comes to sound. Further, the implications of our messing about with audio are surprisingly weird, wacky, wonderful, and even downright bizarre.

Here, let me demonstrate.

Time And Distance Are Partially Interchangeable

Pretty much every audio human who gets into “the science” can quote you the rule of thumb: Sound pressure waves propagate away from their source at roughly 1 foot per millisecond, or 1 meter per 3 milliseconds. (Notice that I said “roughly.” Sound is actually a little bit faster than that, but the error is small enough to be acceptable in cases where inches or centimeters aren’t of concern.) In a way that’s very similar to light, time and distance can be effectively the same measurement. When you hear a loudspeaker that’s 20 feet away, you aren’t actually hearing what the box sounds like now. You’re hearing what the box sounded like roughly 20 milliseconds ago.
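If you want to see how “roughly” the rule of thumb is, here’s a quick sketch that converts distance into travel time. It assumes a speed of sound of 343 m/s, which is about right for air at room temperature (roughly 20 °C); the real number drifts with temperature, so treat the output as approximate.

```python
# Assumed speed of sound in air at about 20 °C (68 °F); the real value shifts with temperature.
SPEED_OF_SOUND_M_PER_S = 343.0
METERS_PER_FOOT = 0.3048  # exact by definition

def delay_ms_from_meters(distance_m: float) -> float:
    """Travel time in milliseconds for sound covering distance_m."""
    return distance_m / SPEED_OF_SOUND_M_PER_S * 1000.0

def delay_ms_from_feet(distance_ft: float) -> float:
    """Travel time in milliseconds for sound covering distance_ft."""
    return delay_ms_from_meters(distance_ft * METERS_PER_FOOT)

# Compare the rules of thumb (1 ft per ms, 1 m per 3 ms) with the assumed speed.
for feet in (1, 20, 100):
    print(f"{feet:>3} ft: rule of thumb {feet:.1f} ms, at 343 m/s {delay_ms_from_feet(feet):.2f} ms")
for meters in (1, 10):
    print(f"{meters:>3} m: rule of thumb {3 * meters:.1f} ms, at 343 m/s {delay_ms_from_meters(meters):.2f} ms")
```

Under that assumption, the box 20 feet away is really about 17.8 ms in the past, which is close enough to “20” for most live-sound purposes.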

Now, we tend to gloss over all that. It’s just science that you get used to remembering. Think about what it means, though: To an extent, you can move a loudspeaker stack relative to the listener without physically changing the position of the boxes. Assuming that other sound sources (which the brain can use for a timing reference) stay the same, adding delay time to a source effectively makes the source farther away to the observer.

…and yet, the physical location of the source hasn’t changed. When you think about it, that’s pretty wild. What’s even wilder is that the loudspeaker’s coverage and acoustical interaction with the room remain unchanged, even though the loudspeaker is now effectively farther away. Think about it: If we had physically moved the loudspeaker away from the listener(s), more people would be in the coverage pattern. The coverage area expands with distance, but only when the distance is physical. Similarly, if we had moved the loudspeaker away by actually picking it up and placing it farther away, the ratio of direct sound to reverberant sound would have changed. The reverberant field’s SPL (Sound Pressure Level) would have been higher, relative to the SPL of the pressure wave traveling directly to the listeners. By using a delay line, though, the SPL of the sound that arrives directly is unchanged…even though the sound is effectively farther from the audience.

Using a digital delay line, we can sort of “bend the rules” concerning what happens when the speakers are farther away from the audience.

Whoa.

It’s important to note, of course, that the rules are only “bent.” Also, they’re only bent in terms of select portions of how humans perceive sound. Whether or not the loudspeakers are physically moved or simply moved in time, the acoustical interactions with other sound sources DO change. This can be good or bad. Also, a loudspeaker that’s farther from a listener should have lower apparent SPL than the same box with the same power at a closer distance.

But that’s not what happens with a “unity gain” delay line.

And that’s another noodle-baker. The loudspeaker is perceptually farther from the listener, yet it has the same SPL as a nearby source.
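To put numbers on that, here’s a sketch comparing a physical move with a unity-gain delay. It assumes free-field inverse-square behavior for the direct sound only (level drops by 20·log10 of the distance ratio, so about 6 dB per doubling) and ignores the room entirely. The 1.125 ft/ms speed is an assumed conversion of roughly 343 m/s.

```python
import math

SPEED_OF_SOUND_FT_PER_MS = 1.125  # assumed: about 343 m/s expressed in feet per millisecond

def physical_move(near_ft: float, far_ft: float) -> tuple[float, float]:
    """Return (direct-sound level change in dB, extra arrival time in ms) for moving the box.

    Uses the free-field inverse-square law: level falls by 20*log10(far/near) dB.
    Room reflections are ignored.
    """
    level_change_db = -20.0 * math.log10(far_ft / near_ft)
    extra_ms = (far_ft - near_ft) / SPEED_OF_SOUND_FT_PER_MS
    return level_change_db, extra_ms

def delay_line_move(near_ft: float, far_ft: float) -> tuple[float, float]:
    """Return (level change in dB, extra arrival time in ms) for a unity-gain delay.

    The delay imitates the extra travel time, but the direct level is untouched.
    """
    extra_ms = (far_ft - near_ft) / SPEED_OF_SOUND_FT_PER_MS
    return 0.0, extra_ms

for name, move in (("physical", physical_move), ("delay line", delay_line_move)):
    db, ms = move(20.0, 40.0)
    print(f"{name:>10}: {db:+.1f} dB, {ms:.1f} ms later")
```

Both approaches make the sound arrive the same amount later, but only the physical move costs you about 6 dB of direct level.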

Whoa.

(There is no spoon. It is yourself that bends.)

The Strange Case Of Delay And The Reference Frame

That bit above is nifty, but it’s actually pretty basic.

You want something really wild?

When we physically move a loudspeaker, we are most likely reducing its distance to some listeners while increasing its distance to others. (Obvious, right?) However, when we move a loudspeaker using time delay, the loudspeaker’s apparent distance to ALL listeners is increased. No matter where the listener is, the loudspeaker is pushed AWAY from them. It’s like the loudspeaker is in a bubble of space-time that is more distant from all points outside the bubble. Your frame of reference doesn’t matter. The delayed sound source always seems to be more distant, no matter where you’re standing.

Now THERE’S some Star Trek for ya.

If you’re not quite getting it, do this thought experiment: You put a monitor wedge on the floor. You and a friend stand thirty feet apart, with the monitor wedge halfway between the two of you. The distance from each listener to the wedge is 15 feet, right? Now, a delay of 15 ms is applied to the signal feeding the wedge. Remember, both of you are listening to the same wedge. Thus, in the sense of time (that is, ignoring other factors like SPL and direct/ reverberant ratio) each of you perceives the wedge as having moved to where the other person is standing. This is because, again, both of you are hearing the same signal in the same wedge. Both of you are registering a sonic event that has been made 15 ms “late.” It doesn’t matter where one of you is listening from – the wedge always “moves” away from you.

(Cue the theme to “The Twilight Zone.”)

Let me re-emphasize that other, important cues as to the loudspeaker’s location are not present. If you try this experiment in real life, you won’t truly experience the sound of a wedge that’s been moved 15 feet away. The acoustic interaction and SPL factors simply won’t be present. What we’re talking about here is the time component only, divorced from other factors.
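Here’s the wedge experiment as a little sketch, looking at the time component only. The positions are the hypothetical layout from above (you at 0 feet, the wedge at 15, your friend at 30), and it uses the 1 foot per millisecond rule of thumb. “Apparent distance” here just means the distance implied by arrival time: the physical path plus the path the delay imitates.

```python
# Positions along a line, in feet: the hypothetical layout from the thought experiment.
LISTENER_A_FT = 0.0
WEDGE_FT = 15.0
LISTENER_B_FT = 30.0
FT_PER_MS = 1.0  # rule of thumb: roughly 1 foot per millisecond

def apparent_distance_ft(listener_ft: float, source_ft: float, delay_ms: float) -> float:
    """Distance implied by arrival time: physical path plus the path the delay imitates."""
    return abs(source_ft - listener_ft) + delay_ms * FT_PER_MS

# Delaying the wedge by 15 ms: the extra time is the same for every listener.
for name, pos in (("A", LISTENER_A_FT), ("B", LISTENER_B_FT)):
    before = apparent_distance_ft(pos, WEDGE_FT, 0.0)
    after = apparent_distance_ft(pos, WEDGE_FT, 15.0)
    print(f"Delay, listener {name}: {before:.0f} ft -> {after:.0f} ft")

# Contrast: physically sliding the wedge 15 ft toward listener B.
moved_wedge_ft = WEDGE_FT + 15.0
for name, pos in (("A", LISTENER_A_FT), ("B", LISTENER_B_FT)):
    print(f"Physical move, listener {name}: {apparent_distance_ft(pos, moved_wedge_ft, 0.0):.0f} ft")
```

The delay pushes the wedge to 30 feet for both of you, while the physical move helps one listener only by shoving the wedge right on top of the other.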

You can use this “delay lines let you put your loudspeakers in a weird pocket-dimension” behavior to do cool things like directional subwoofer arrays.

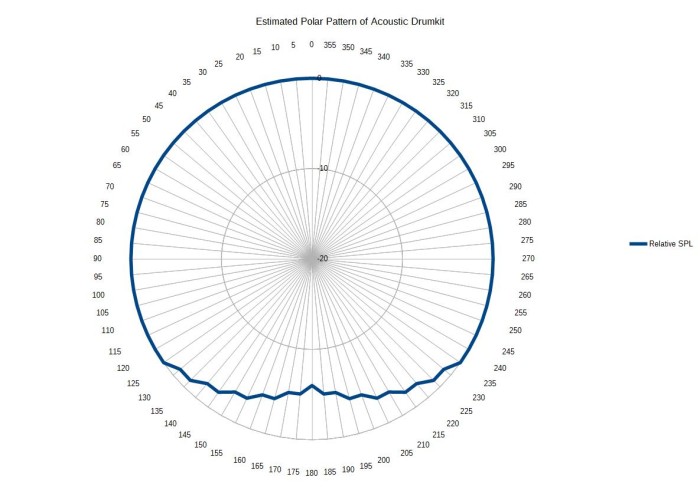

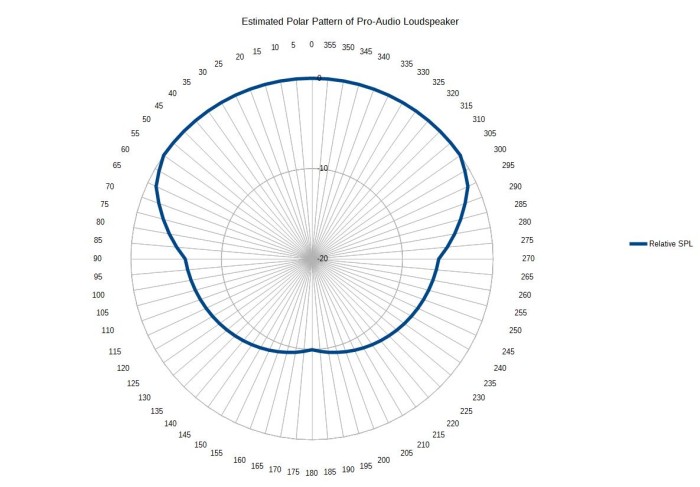

For instance, here’s a subwoofer sitting all by itself. It’s pretty close to being omnidirectional at, say, 63 Hz.

That’s great, and all, but what if we don’t want to toss all that low end around on the stage behind the sub? Well, first, we can put another sub in front of the first one. We put sub number 2 a quarter-wavelength in front of sub 1. (We do, of course, have to decide which frequency we’re most concerned about. In this case, it’s 63 Hz, so all measurements are relative to that frequency.) For someone standing in front of our sub-array, the front sub is about 4 ms early. By the same token, the folks on stage hear sub 2 as being roughly 4 ms late.
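Where does “about 4 ms” come from? A quarter-wavelength of spacing takes exactly a quarter of one cycle to cross, so the spacing and the time offset are two views of the same number. This sketch assumes a speed of sound of 343 m/s.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed, roughly room temperature
METERS_PER_FOOT = 0.3048

def quarter_wave(frequency_hz: float) -> tuple[float, float]:
    """Return (quarter-wavelength in meters, quarter-period in milliseconds)."""
    wavelength_m = SPEED_OF_SOUND_M_PER_S / frequency_hz
    period_ms = 1000.0 / frequency_hz
    return wavelength_m / 4.0, period_ms / 4.0

spacing_m, offset_ms = quarter_wave(63.0)
print(f"Quarter-wave spacing at 63 Hz: {spacing_m:.2f} m ({spacing_m / METERS_PER_FOOT:.1f} ft)")
print(f"Sub 2 is about {offset_ms:.1f} ms early out front and {offset_ms:.1f} ms late on stage")
```

At 63 Hz that works out to a spacing of roughly 1.36 m (about 4.5 feet) and a time offset of just under 4 ms.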

Here’s where the “delay always pushes away from you” mind-screw becomes useful. If we delay subwoofer 2 by a quarter-cycle (the same 4 ms or so that the quarter-wavelength spacing represents), the folks on stage and the folks in the audience will BOTH get the effect of sub 2 being farther away. Because the sub is a quarter-wave too early for the audience, the delay will precisely line it up with subwoofer 1. However, because the second sub is already “late” for the folks on stage, pushing the subwoofer a quarter-wave away means that it’s now “half a wave” late. Being a half-wave late is 180 degrees out of phase, which means that our problem frequency will cancel when you’re standing behind the array. The folks in front get all the bottom end they want, and the performers on stage aren’t being rattled nearly as much.
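Here’s a sketch of that sum, treating each sub as a steady sine wave and assuming both arrive at equal level (a simplification: real subs are never perfectly matched, and the rear sub is a bit quieter behind the array). It adds the same quarter-period delay for everyone and then sums the two arrivals. The 40 Hz and 80 Hz rows are there to show that the fixed delay only cancels completely at the design frequency.

```python
import cmath
import math

DESIGN_FREQ_HZ = 63.0
# A quarter of a 63 Hz cycle (about 4 ms): the travel time across the quarter-wave
# spacing, and also the delay applied to sub 2.
QUARTER_PERIOD_MS = 1000.0 / DESIGN_FREQ_HZ / 4.0

def sub2_lateness_ms(position: str) -> float:
    """How late sub 2 arrives relative to sub 1, after its electronic delay."""
    # Out front, sub 2 is physically closer (early); on stage it is farther (late).
    acoustic_ms = -QUARTER_PERIOD_MS if position == "audience" else QUARTER_PERIOD_MS
    # The delay pushes sub 2 later for every listener, wherever they stand.
    return acoustic_ms + QUARTER_PERIOD_MS

def summed_level_db(frequency_hz: float, lateness_ms: float) -> float:
    """Level of both subs together, relative to one sub alone, in dB.

    Assumes equal amplitudes at the listener and a single steady sine wave.
    """
    phase_rad = 2.0 * math.pi * frequency_hz * lateness_ms / 1000.0
    magnitude = abs(1.0 + cmath.exp(-1j * phase_rad))
    return 20.0 * math.log10(magnitude) if magnitude > 1e-9 else float("-inf")

for position in ("audience", "stage"):
    lateness = sub2_lateness_ms(position)
    for freq in (40.0, DESIGN_FREQ_HZ, 80.0):
        print(f"{position:>8}, {freq:4.0f} Hz: sub 2 {lateness:.2f} ms late, "
              f"{summed_level_db(freq, lateness):+.1f} dB vs. one sub")
```

Out front, the two subs line up and add about 6 dB at every frequency. On stage, 63 Hz cancels completely in this idealized model, while nearby frequencies get only partial help.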

Radical, dude.

The Acoustical Foam TARDIS

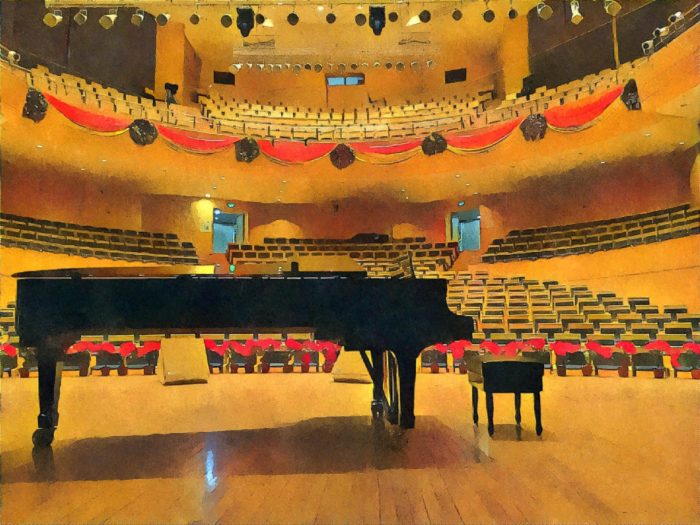

For my final trick, let me tell you about how to make a room acoustically larger by filling it up more. It’s very Doctor Who-ish.

What I’m getting at is making the room more absorptive. To make the walls of the room absorb more sound, we have to add something – acoustic foam, for instance. By adding that foam (or drape, or whatever), we reduce the amount of sound that can strike a boundary and re-emit into the space. In a certain way, reducing the SPL and high-frequency content of reflections makes the room sound larger. Reduced overall reflection level is consistent with audio that has had to travel farther to reach the listener, and high-frequency content is particularly attenuated as sound travels through air.
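One way to put rough numbers on “quieter reflections” is the classic diffuse-field room model (the terms of the Hopkins-Stryker equation), where the reverberant level depends on the room constant R = S·a/(1 − a), with S the total surface area and a the average absorption coefficient. This sketch assumes a hypothetical room with 500 m² of surface and a listener 5 m from an omnidirectional source. The model assumes a fully diffuse reverberant field, which small or heavily treated rooms may not really have, and it says nothing about the high-frequency air loss mentioned above.

```python
import math

def room_constant(surface_m2: float, avg_absorption: float) -> float:
    """Room constant R = S * a / (1 - a), from the diffuse-field model."""
    return surface_m2 * avg_absorption / (1.0 - avg_absorption)

def direct_to_reverberant_db(distance_m: float, surface_m2: float,
                             avg_absorption: float, directivity_q: float = 1.0) -> float:
    """Direct-to-reverberant ratio in dB, using the Hopkins-Stryker terms."""
    direct = directivity_q / (4.0 * math.pi * distance_m ** 2)
    reverberant = 4.0 / room_constant(surface_m2, avg_absorption)
    return 10.0 * math.log10(direct / reverberant)

# Hypothetical room: 500 m^2 of total surface, listener 5 m from an omni source.
for alpha in (0.05, 0.2, 0.5, 0.8):
    print(f"average absorption {alpha:.2f}: "
          f"D/R = {direct_to_reverberant_db(5.0, 500.0, alpha):+.1f} dB")
```

As absorption goes up, the reverberant field drops relative to the direct sound, which is the direction a trip outdoors would take you.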

So, in an acoustic sense, reducing the room’s physical volume by adding absorptive material actually makes the room “sound” bigger. Of course, this doesn’t always register, because we’re culturally used to the idea that large spaces have a “loud” reverberant field. We westerners tend to build big buildings that are made of things like stone, metal, glass, and concrete – which makes for a LOT of sound reflectance.

It might be a little bit better to say that increased acoustical absorption makes an interior sound more and more like being outside. Go far enough with your acoustic treatment (whether or not this is a good idea is beyond the scope of this article), and you could acoustically go outdoors by entering the building.

Whoa.
