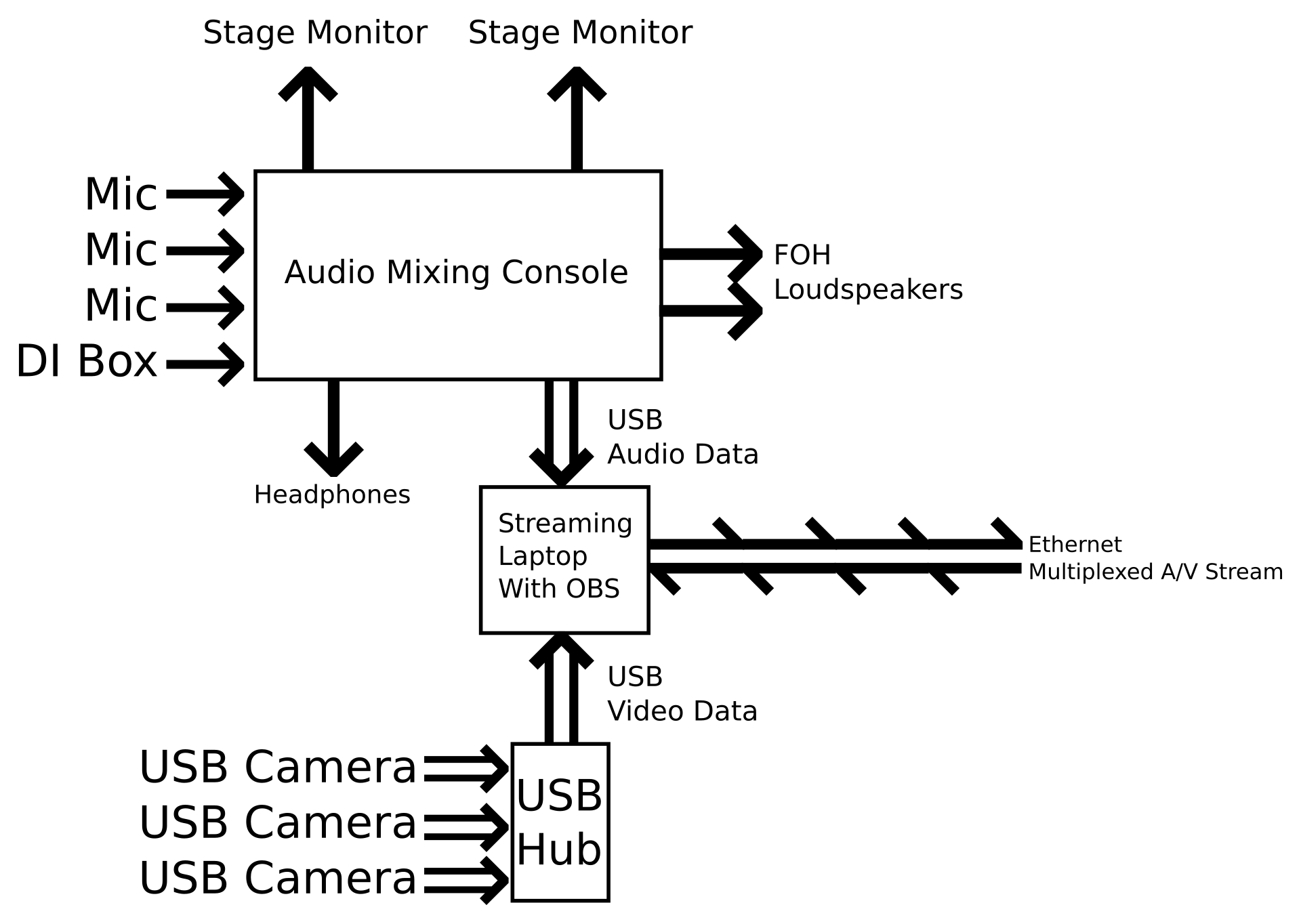

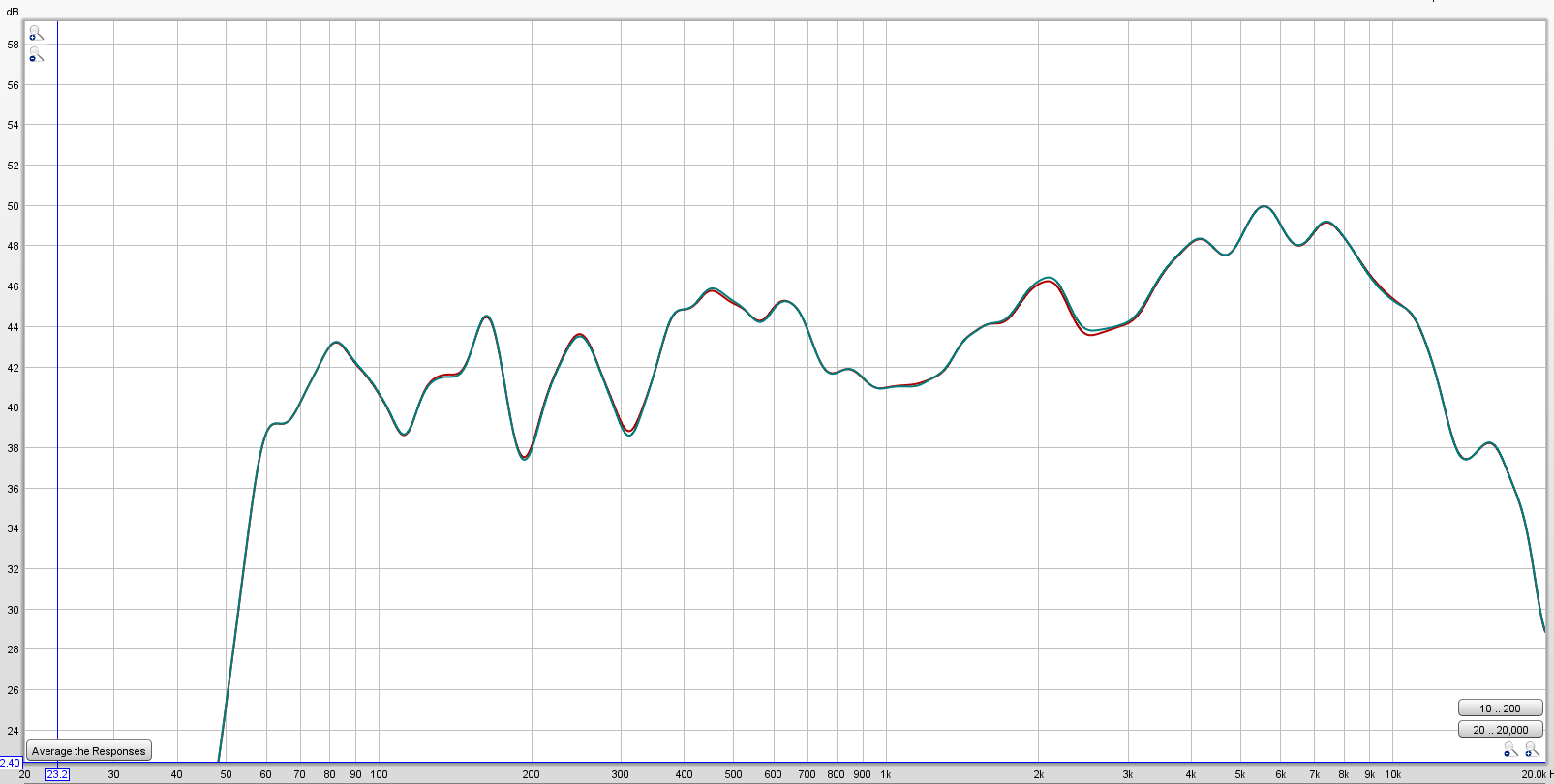

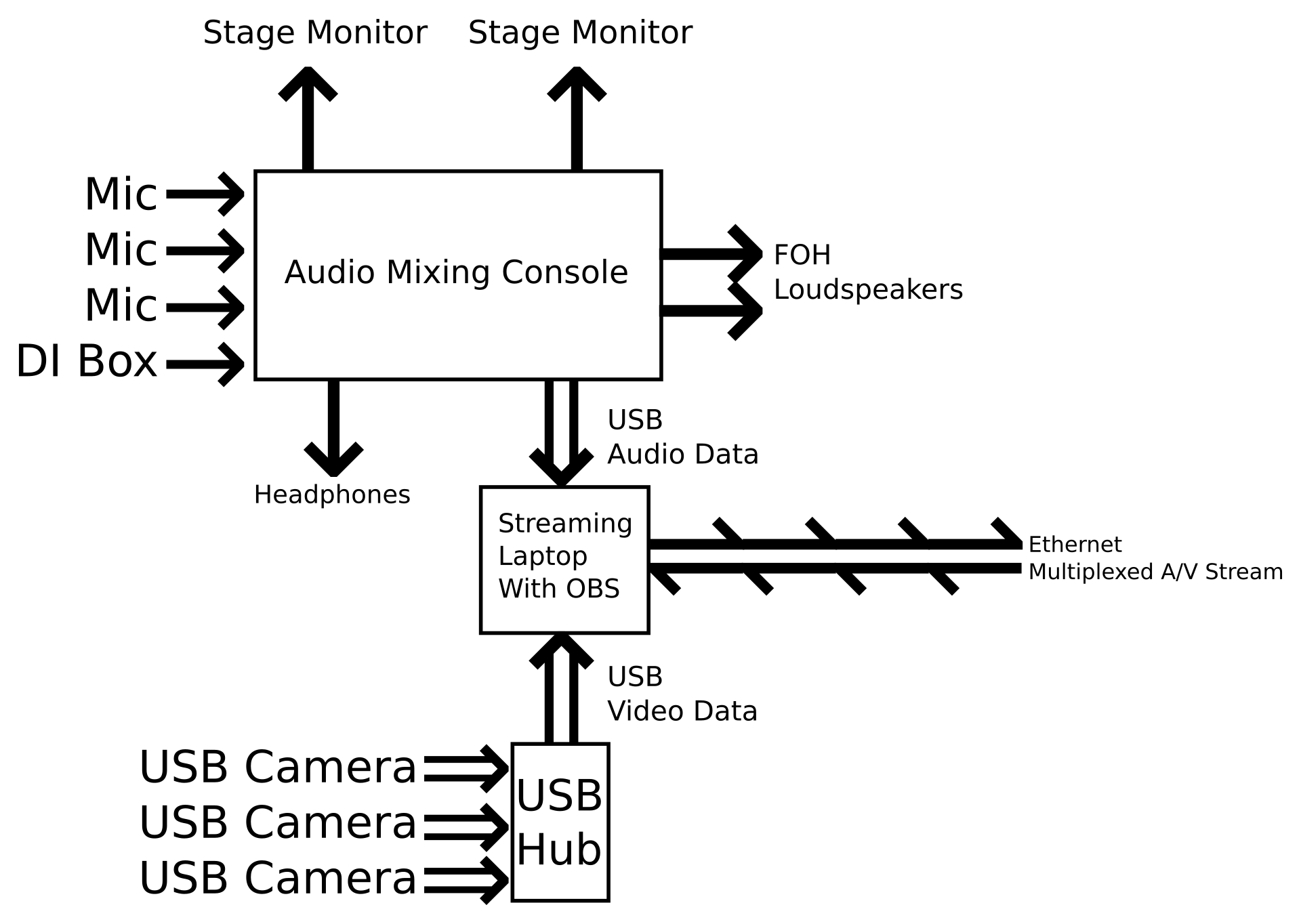

A few days ago, I was contacted by Forest Aeon on Patreon. Forest asked me to go into detail about the streaming setup I’ve been using for Carolyn’s Garden concerts during these days of “pandemia.” I was tickled to be asked and happy to oblige. So, first things first. Here’s a diagram:

But what does it all mean?

To start with, streaming requires audio and video inputs. Those inputs are fed to hardware or software that can combine them into a multiplexed audio/video stream, and then that stream is sent to an endpoint – like Facebook, YouTube, or (in my case) Restream.io.

For my purposes, the audio required a good bit of heavy lifting. High production values were a must, and those standards – while high everywhere – had to meet different needs at multiple destinations. The musicians needed multiple mixes for stage monitors, the small audience present at Carolyn’s needed something they could listen to, the streaming laptop needed an appropriately processed version of the main mix, and I needed to monitor that feed to the laptop.

With all those needs, a well-appointed mixing console was a must. An X32 was the natural choice, because it has the routing and processing necessary to make it all happen. There were mixes for the individual musicians, a mix for the live audience, and crucially, a mix for the stream that “followed” the main mix but had some independence.

What I mean by that last phrase is driven by signal flow. The stream mix is post-fader, just like the main mix, so if I put more of a channel into the main mix, that channel will also be driven harder in the stream. This makes sense to have in place, because a solo that needs a little push for the live audience should also get a push for the remote audience. At the same time, I allowed a good bit of margin in where those post-fader sends could be placed. I needed that margin because the stream mix is far more immune to acoustic contributions in the room than the live mix is. In the live mix, a particular instrument might be “hot” in the monitors, and only need a bit of reinforcement in the room. However, that monitor bleed is not nearly as prevalent in the mix to the stream, so that particular channel might need to be “scaled up” to create an appropriate blend for the remote listeners.
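To make the “follows the main mix but has some independence” idea concrete, here is a minimal sketch of post-fader send arithmetic in decibels. The function name and every level in it are made-up numbers for illustration, not settings from the actual shows.

```python
def post_fader_send_db(source_db: float, fader_db: float, send_db: float) -> float:
    """Level arriving at a mix from a post-fader send, in dB.

    Gains expressed in dB simply add: the channel fader is applied first,
    then the send level for that particular mix.
    """
    return source_db + fader_db + send_db


# Hypothetical numbers for a single instrument channel.
source = -18.0      # channel level ahead of the fader
main_send = 0.0     # contribution to the main (room) mix
stream_send = 6.0   # "scaled up" for the stream, which gets little monitor bleed

before_main = post_fader_send_db(source, 0.0, main_send)
before_stream = post_fader_send_db(source, 0.0, stream_send)

# Push the fader up 3 dB for a solo: both mixes rise by the same amount,
# while the offset between stream and main is left alone.
after_main = post_fader_send_db(source, 3.0, main_send)
after_stream = post_fader_send_db(source, 3.0, stream_send)

print("main change:", after_main - before_main)
print("stream change:", after_stream - before_stream)
print("stream offset over main:", after_stream - after_main)
```

The fader move shows up equally in both mixes, and the send level is the separate knob that sets how far the stream sits above or below the room.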

Another reason for a separate stream mix was to be able to have radically different processing for the mix as a whole. For starters, the stream mix was often delayed by 100ms or more to better match the timing of the video. If the stream mix had been just a split of the main output, that would have meant a very troublesome delay for the audience in the garden. Further, the stream mix was heavily compressed in order for its volume to be consistently high, as that’s what is generally expected by people listening to “playback.” Such compression would have been quite troublesome (and inappropriate, and unnecessary) for the live audience.
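As a rough illustration of what that delay amounts to, the sketch below converts milliseconds to samples and runs a few values through a bare-bones delay line. The 48 kHz sample rate is an assumption (the post doesn't state what rate the console ran at), and `DelayLine` is a hypothetical helper, not how the console implements its delay.

```python
from collections import deque


def delay_in_samples(delay_ms: float, sample_rate_hz: int = 48_000) -> int:
    """Convert a delay in milliseconds to a whole number of samples."""
    return round(delay_ms * sample_rate_hz / 1000)


class DelayLine:
    """Fixed-length delay: each sample comes back out n_samples later."""

    def __init__(self, n_samples: int) -> None:
        # Pre-fill with silence so the first outputs are zeros.
        self._buffer = deque([0.0] * n_samples)

    def process(self, sample: float) -> float:
        self._buffer.append(sample)
        return self._buffer.popleft()


# 100 ms at the assumed 48 kHz rate.
print(delay_in_samples(100))

# A tiny 2-sample delay so the behavior is easy to see.
line = DelayLine(2)
delayed = [line.process(x) for x in [1.0, 2.0, 3.0]]
print(delayed)
```

At the assumed rate, 100 ms works out to 4,800 samples of audio held back on the stream mix only, which is why it had to live on its own bus rather than on a split of the main output.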

The mix for the stream was directed to the console’s option card, which is a USB audio interface. That USB audio was then handed off to the streaming laptop, which had an OBS (Open Broadcaster Software) input set to use the ASIO plugin available for OBS. All other available audio from the laptop was excluded from the broadcast.

Video managed to be both quite easy and a little tricky, just in divergent ways. On the easy side, getting three – very basic – USB cameras from Amazon into a USB hub and recognized by OBS was pretty much a snap. However, the combined video data from all three cameras ended up saturating the USB bus, meaning that I ended up setting the cameras to shut themselves off when not in use. Transitions from camera to camera were less smooth, then, as the camera being transitioned from would abruptly shut off, but I could keep all three cameras available at a moment’s notice.
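For a feel of how quickly camera data adds up, here is a back-of-the-envelope sketch. Everything in it is an assumption: the post doesn't say what resolution, frame rate, or pixel format the cameras used, or which USB version the hub was on. The numbers below assume uncompressed 720p at 30 frames per second with 2 bytes per pixel, on a USB 2.0 bus with its nominal 480 Mbit/s signaling rate. Cameras that send compressed video need far less, so treat this as an illustration of the arithmetic rather than a measurement of my setup.

```python
USB2_NOMINAL_MBIT_S = 480.0  # nominal USB 2.0 high-speed signaling rate


def raw_video_mbit_s(width: int, height: int, fps: int, bytes_per_pixel: int = 2) -> float:
    """Data rate of an uncompressed video feed, in megabits per second."""
    bits_per_frame = width * height * bytes_per_pixel * 8
    return bits_per_frame * fps / 1_000_000


# Assumed camera mode: 1280x720, 30 fps, 2 bytes per pixel.
one_camera = raw_video_mbit_s(1280, 720, 30)
three_cameras = 3 * one_camera

print(f"one camera:    {one_camera:.1f} Mbit/s")
print(f"three cameras: {three_cameras:.1f} Mbit/s")
print("one fits on the bus:  ", one_camera < USB2_NOMINAL_MBIT_S)
print("three fit on the bus: ", three_cameras < USB2_NOMINAL_MBIT_S)
```

Under those assumptions a single feed already uses most of the nominal bus rate, so running only the active camera is one way to stay under the ceiling.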

With OBS I could combine those camera feeds with the mixer audio, plus some text and graphics, and then encode the result into an RTMP stream to Restream.io. (As an aside, a very handy feature of OBS is the Scene Collection, which allowed me to have a set of scenes for each act. In my case, this made having a Venmo address for each act much easier, because switching to the appropriate collection brought up the correct text object.)

A very big thing for me was the manner in which the laptop was connected to the public Internet. I was insistent on using a physical patch cable, because I simply don’t trust Wi-Fi to be reliable enough for high-value streaming. That’s not to say I would turn down wireless networking in a pinch, but I would never have it as my first option. Luckily, Cat6 patch cable is pretty darn cheap, being available for about $0.25 per foot. A 100′ cable, then, is all of $25. That’s awfully affordable for peace of mind, and drives home the point that it takes very expensive wireless to be as good as a basic piece of wire.

So, there you have it: My streaming setup for summer concerts.

Want to use this image for something else? Great! Click it for the link to a high-res or resolution-independent version.
