Pushing 10 dB ahead of stage garble can be punishing for the crowd.

Please Remember:

The opinions expressed are mine only. These opinions do not necessarily reflect anybody else’s opinions. I do not own, operate, manage, or represent any band, venue, or company that I talk about, unless explicitly noted.

Want to use this image for something else? Great! Click it for the link to a high-res or resolution-independent version.

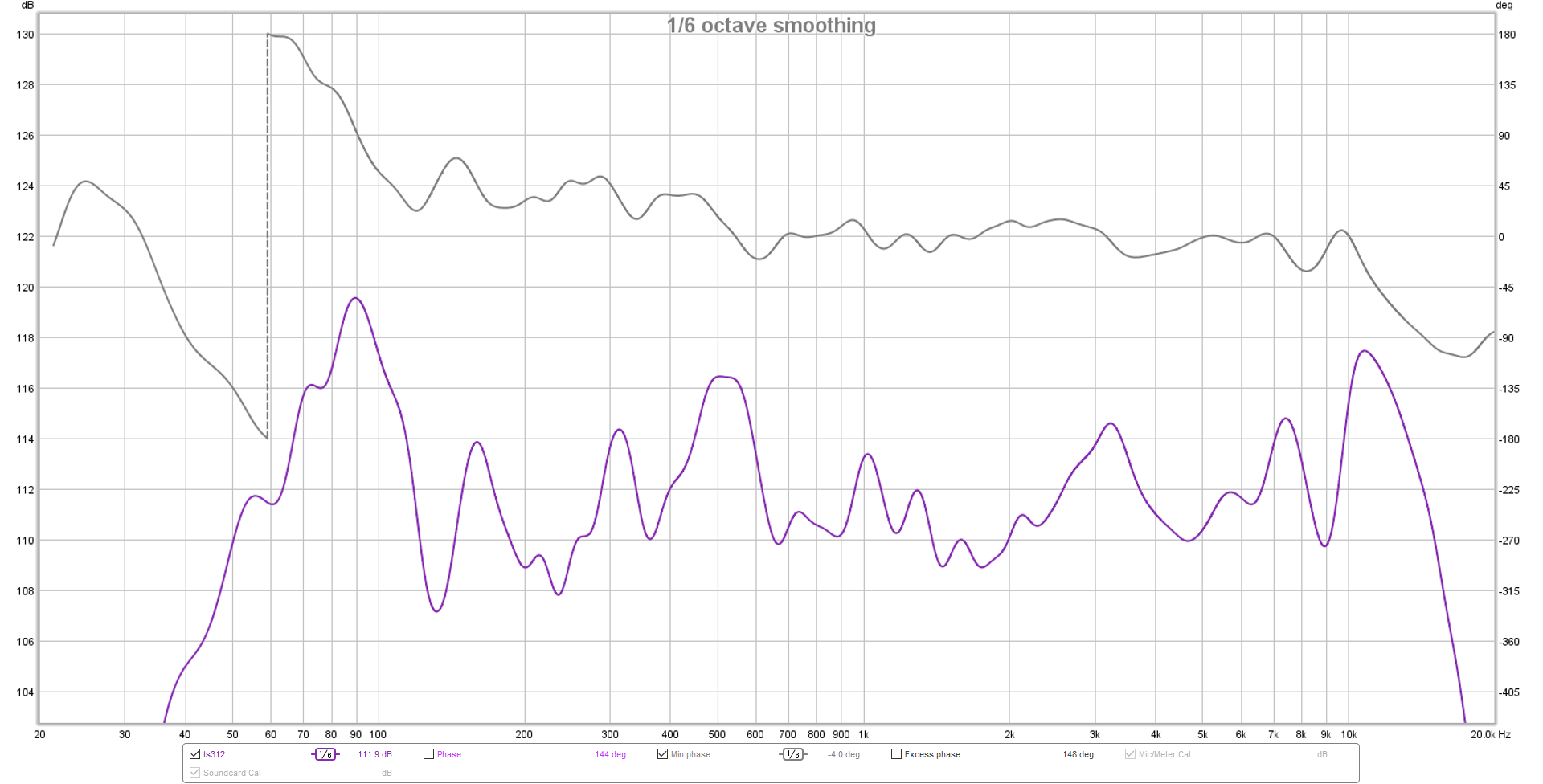

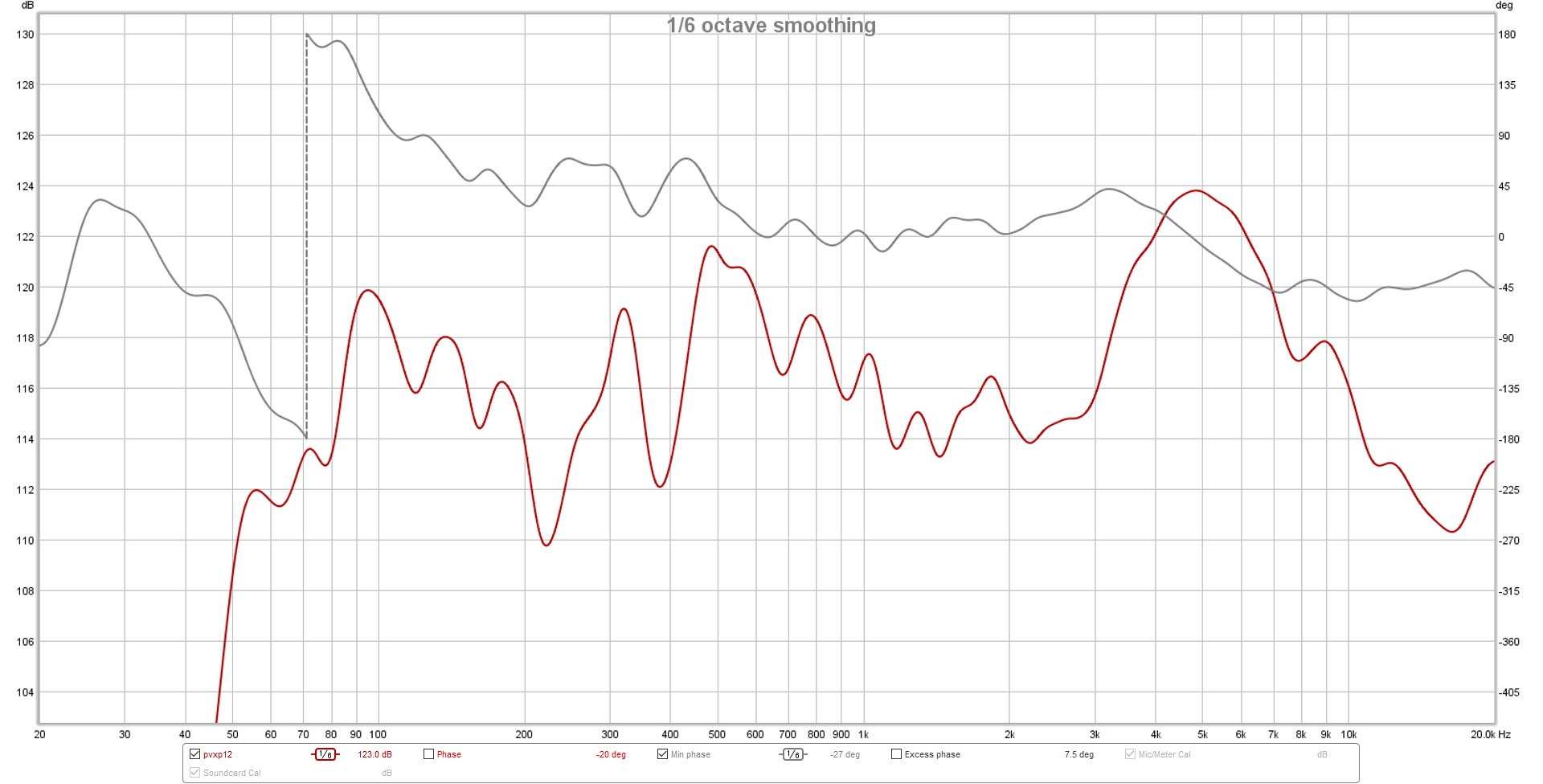

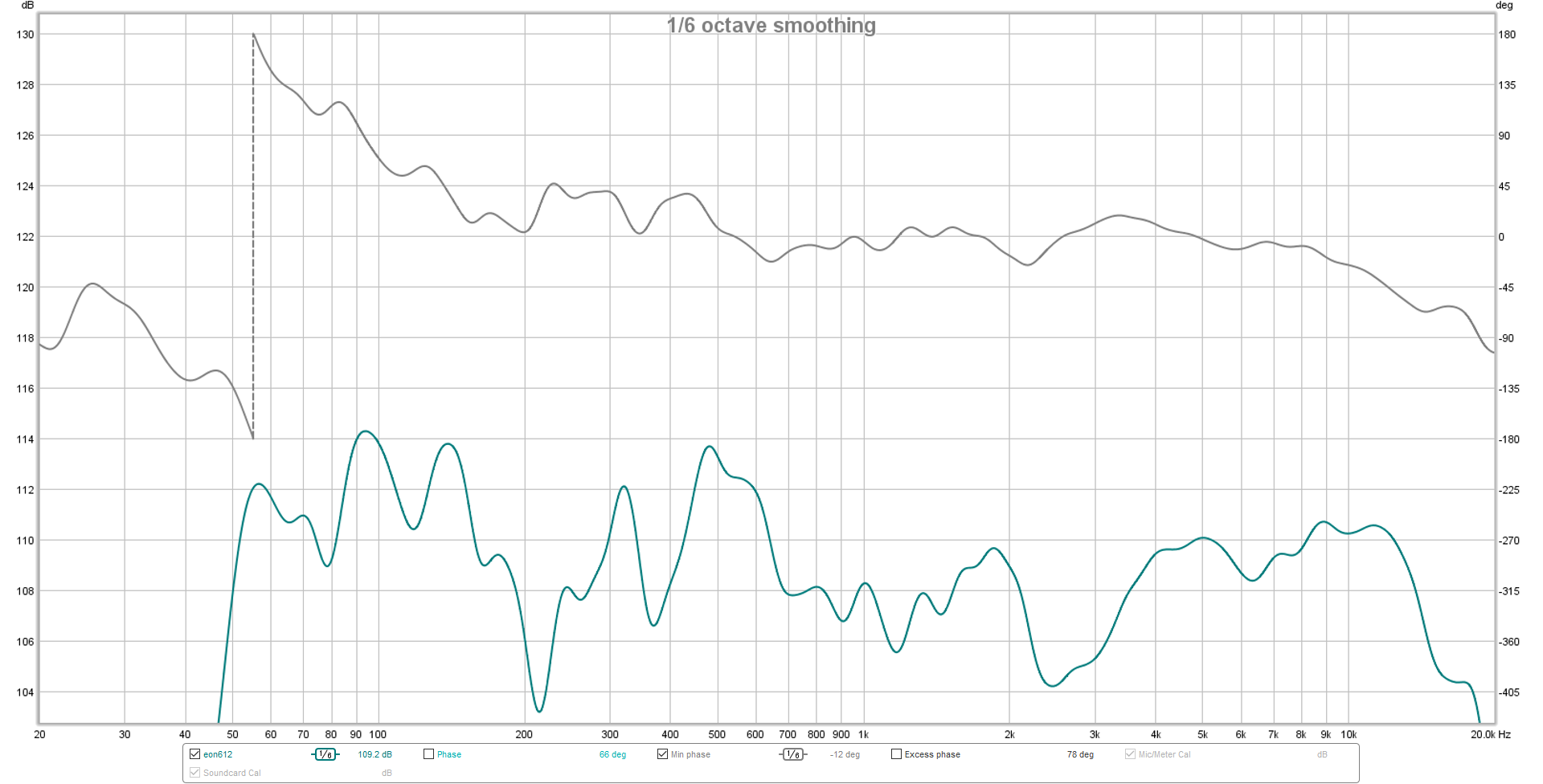

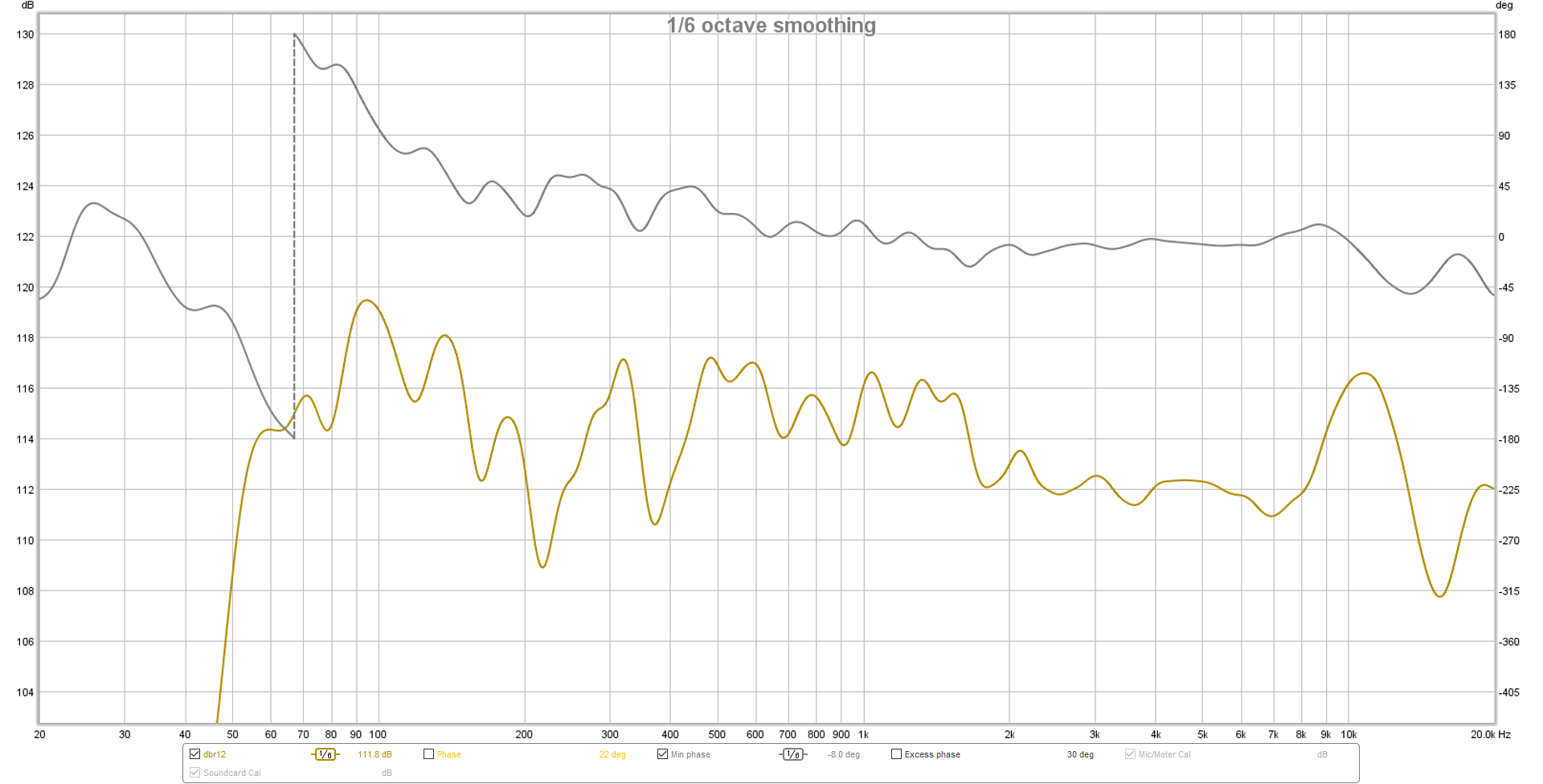

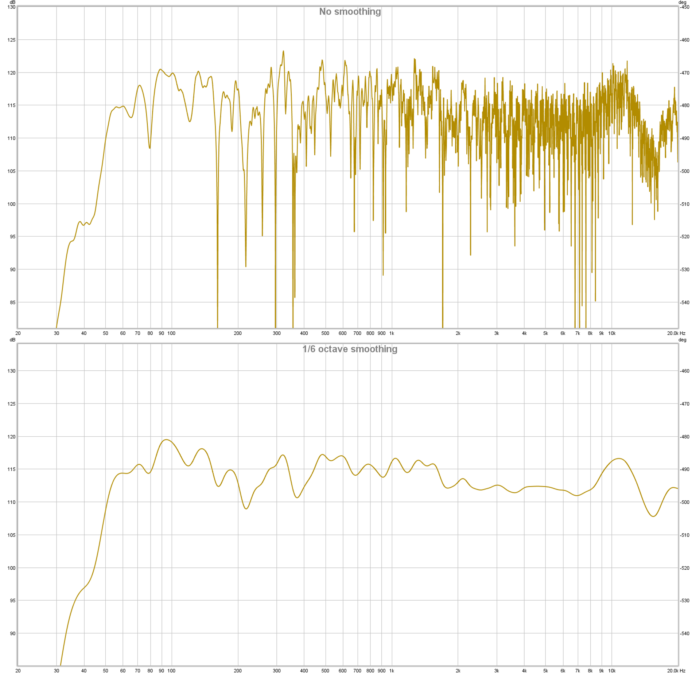

I believe it was Mark Hellinger who once said that audio engineers don’t feel like they’ve got real control over a show until the PA is 10 dB louder than everything else. I’m pretty sure he was right, both due to my own experience and a bit of SPL math.

When adding sound pressure levels from uncorrelated sources, you use the formula: 10 * log10(10^(SPLa/10) + 10^(SPLb/10) + …) to get your answer. So, if the sound from the stage is 100 dB SPL, and the PA is also making 100 dB SPL, you get 10 * log10(10^10 + 10^10), or 103 dB SPL. The implication there, beyond things simply getting louder, is that the sonic contribution from the stage is quite large in proportion to the FOH PA system. Wind up FOH to 110 dB SPL, and something curious happens. You end up with 10 * log10(10^10 + 10^11), or 110.4 dB SPL. See that? The 110 dB from FOH essentially overwhelms the wash from the deck.
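If you'd rather not punch that into a calculator every time, here's a minimal Python sketch of the same sum. The function name `sum_spl` is just something I made up for illustration, and the math assumes the sources are uncorrelated, so their powers add.

```python
import math

def sum_spl(*levels_db):
    """Combine SPL values (in dB) by summing their powers.

    Assumes the sources are uncorrelated, as in the formula above.
    """
    total_power = sum(10 ** (level / 10) for level in levels_db)
    return 10 * math.log10(total_power)

print(round(sum_spl(100, 100), 1))  # stage and PA equal -> 103.0
print(round(sum_spl(100, 110), 1))  # PA 10 dB ahead -> 110.4
```

Feed it as many sources as you like; a third 100 dB SPL source next to the first two would simply be `sum_spl(100, 100, 100)`.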

Great, right?

But think about how loud that is. Then, think about how loud it is if the band is REALLY cookin’, and monitor world is on the gas. You might have a band making 105 dB SPL or more. Thus, if you want to “get control” with FOH, you have to get things barking at 7 – 10 dB above that level. One hundred and fifteen decibels across the board (not just subwoofer material) is not much fun to most folks.
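To put rough numbers on that trade-off, here's a small Python sketch that shows how much the stage wash still adds on top of FOH at a few different margins. The helper name `stage_excess_db` and the chosen margins are my own illustrative picks, and the math again assumes uncorrelated sources.

```python
import math

def stage_excess_db(margin_db):
    """dB by which stage + FOH exceeds FOH alone.

    margin_db is how far FOH sits above the stage level.
    Assumes uncorrelated sources, so powers add.
    """
    return 10 * math.log10(1 + 10 ** (-margin_db / 10))

stage_db = 105  # the loud-band figure used above
for margin_db in (0, 3, 7, 10):
    foh_db = stage_db + margin_db
    excess_db = stage_excess_db(margin_db)
    total_db = foh_db + excess_db
    print(f"FOH at {foh_db} dB SPL: total {total_db:.1f} dB SPL, "
          f"stage adds {excess_db:.1f} dB")
```

At a 7 dB margin the stage adds a bit under 1 dB to what FOH is doing, and at 10 dB it adds about 0.4 dB. That last half-decibel of "control" costs you another 3 dB of PA level.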

You have to watch out for your tendency to try for an FOH takeover all the time. It will often be your first instinct. It’s often mine. Even though I’ve managed to keep it somewhat in check, I still have a strong bent towards guessing too high. I especially guess too high when there’s a lot of musically uncorrelated noise, like audience chatter. That’s not what I want to listen to, and I unconsciously build my mix around drowning out the conversation. Sometimes, folks are okay with that. At other times…not so much.

Be mindful of the numbers. Try to be careful. Accept the idea that not being in total control of the sonic experience is okay, because the cost of total control may be marked discomfort for everyone around you.