Sound is a physical phenomenon, and is therefore quantifiable through measurement.

Please Remember:

The opinions expressed are mine only. These opinions do not necessarily reflect anybody else’s opinions. I do not own, operate, manage, or represent any band, venue, or company that I talk about, unless explicitly noted.

Want to use this image for something else? Great! Click it for the link to a high-res or resolution-independent version.

I’m going to start by saying this: Something being measurable does NOT require the exclusion of a sense of beauty, wonder, and awe about it. Measuring doesn’t destroy all that. It merely informs it.

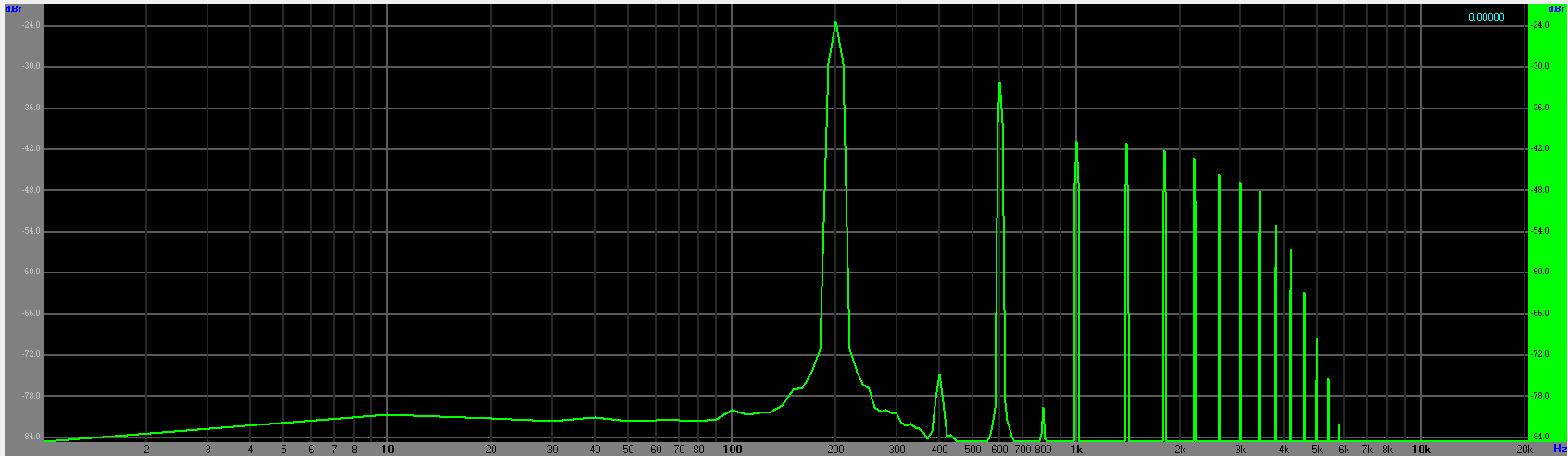

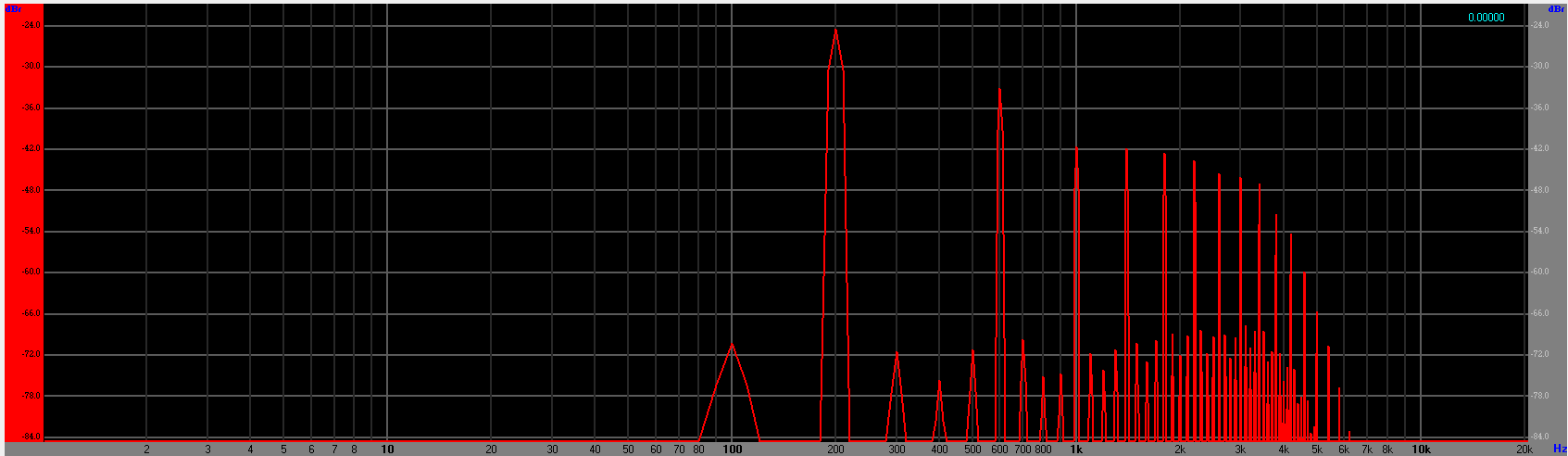

The next thing I’m going to say is that it drives me CRAZY how, in an industry that is all physics all the time, we manage to convince ourselves that there are extra-logical explanations for “stuff sounding better.” Horsefeathers! If your ears detected a real difference – a difference not generated entirely by your mind – then that difference can be measured and quantified. Don’t get me wrong! It might be very hard to measure and quantify. Designing the experiment might very well be non-trivial. You might not know what you’re looking for. Your human hearing system might be able to deal with contaminants to the measurement that a single microphone might not handle well.

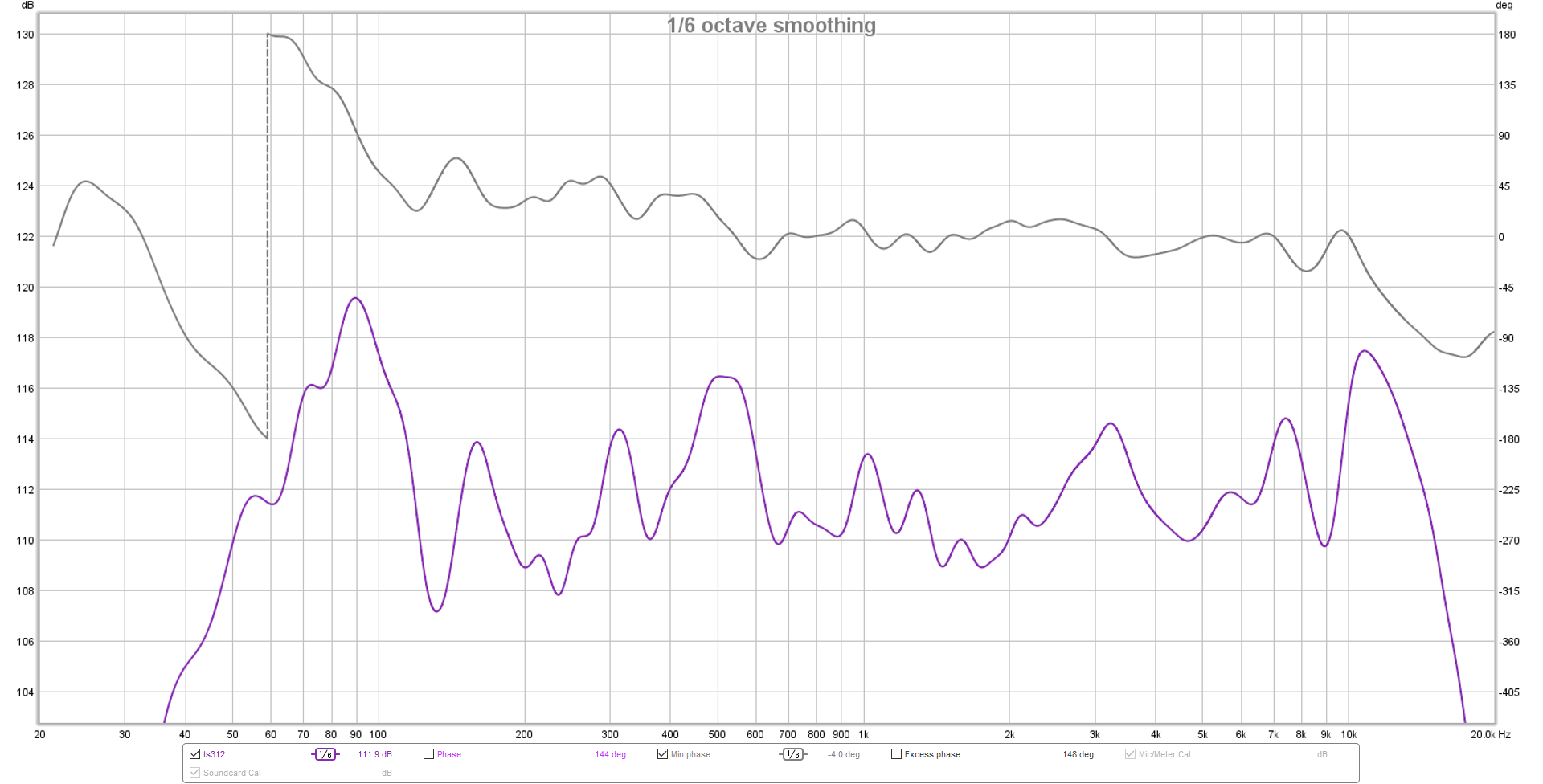

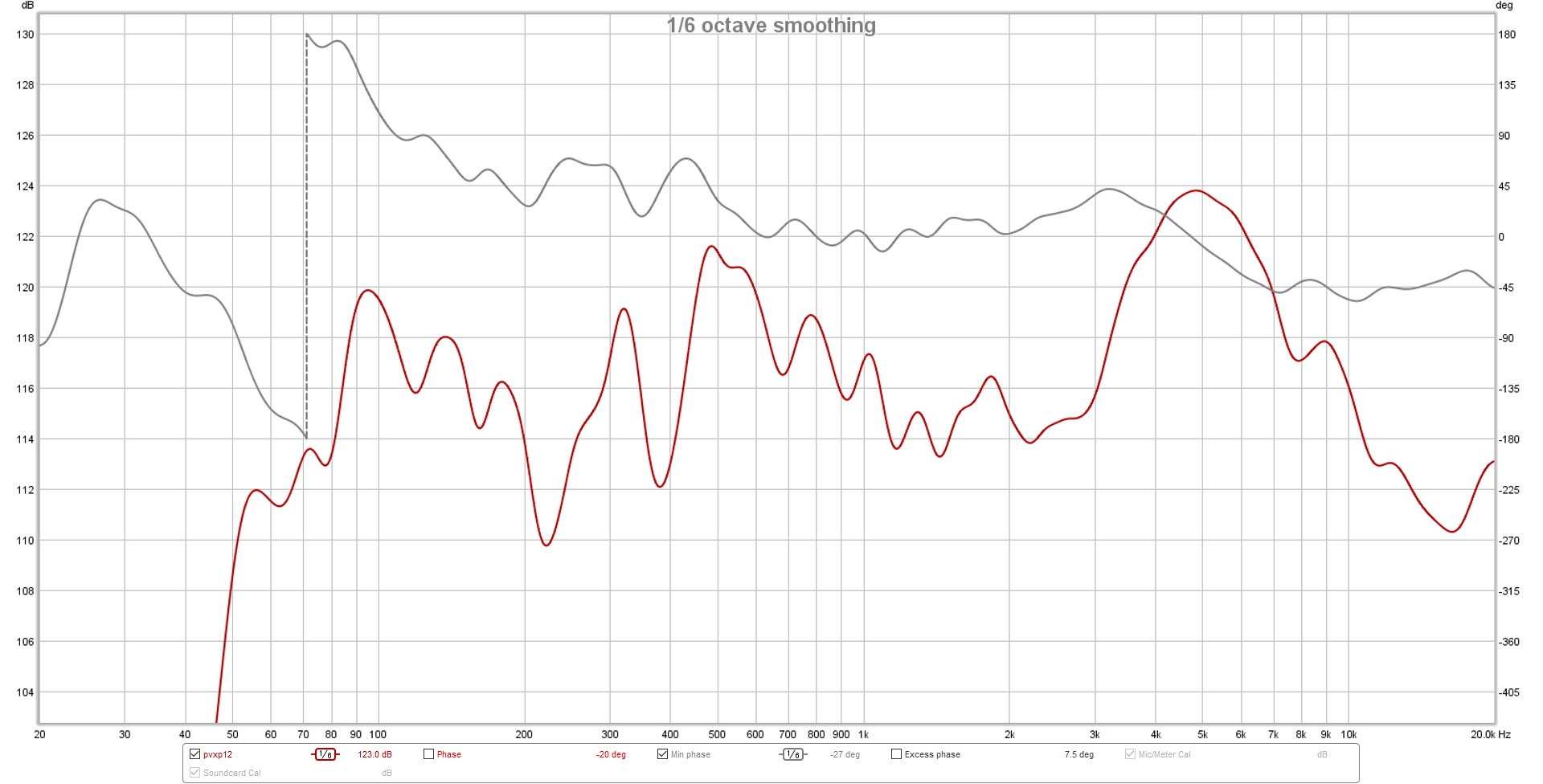

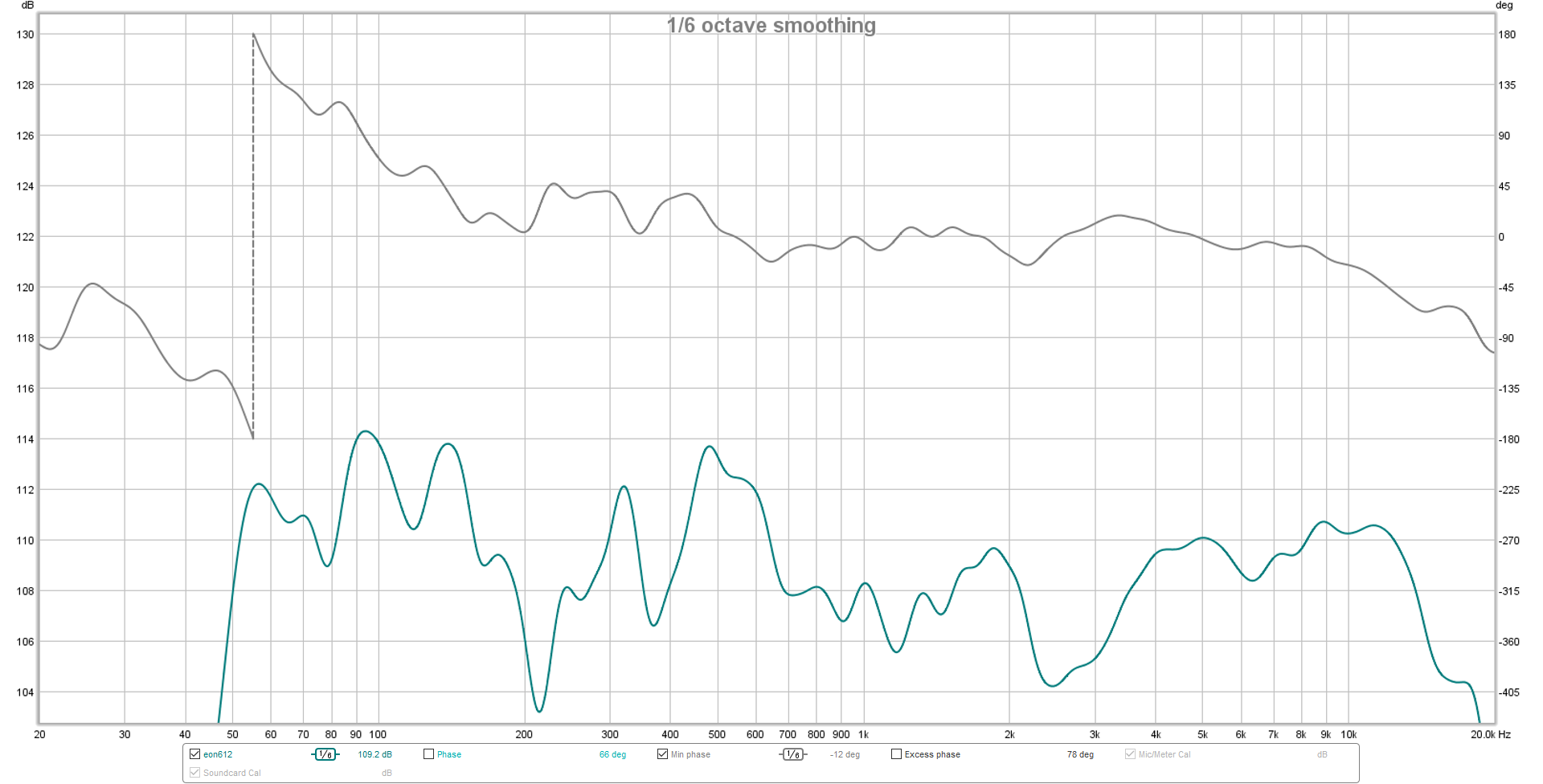

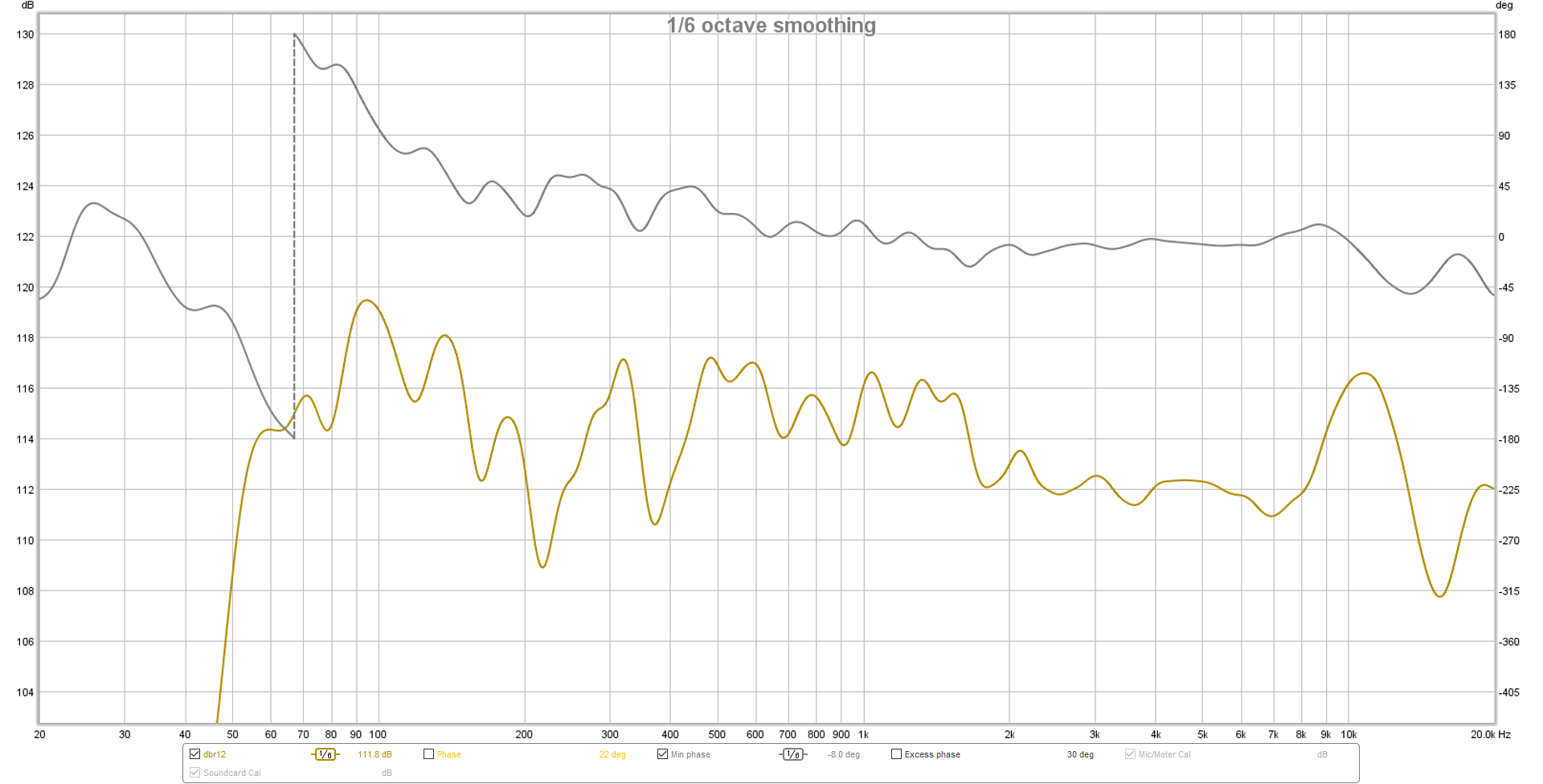

But whatever the difference is, if it’s real, it can definitely be described in terms of magnitude, frequency, and phase.

Take this statement: “We need to run the system through [x], because [person] ran their system through [x], and it all sounded a lot better.”

That’s fair to say. In this business, perception matters. But ask yourself, why did you like the system better when [x] was involved? There has to be a physical reason, assuming the system actually sounded different. If you deluded yourself into thinking there was a difference (because [x] is much more expensive than [y]), that’s a whole other discussion. Disregarding that possibility…

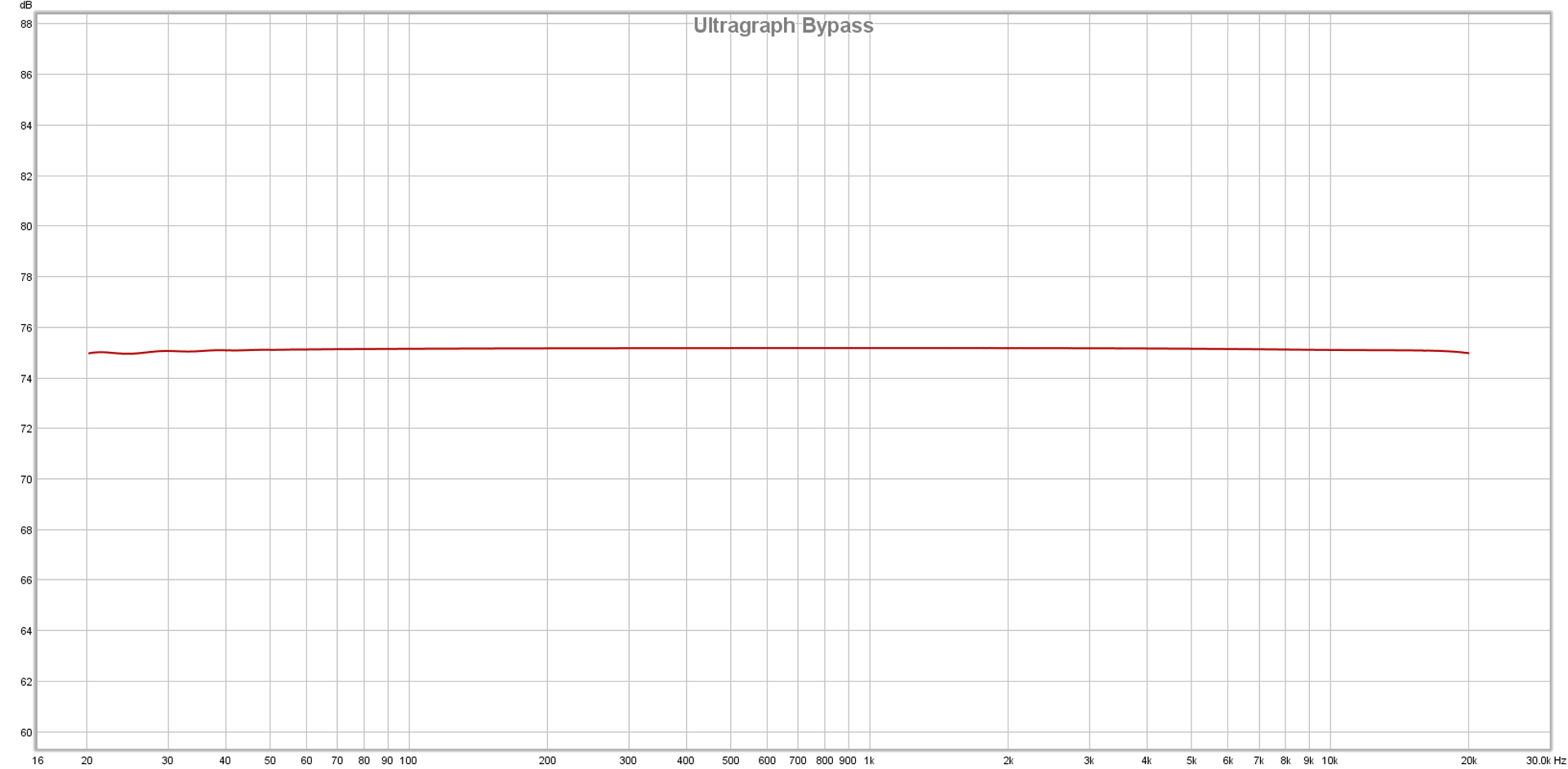

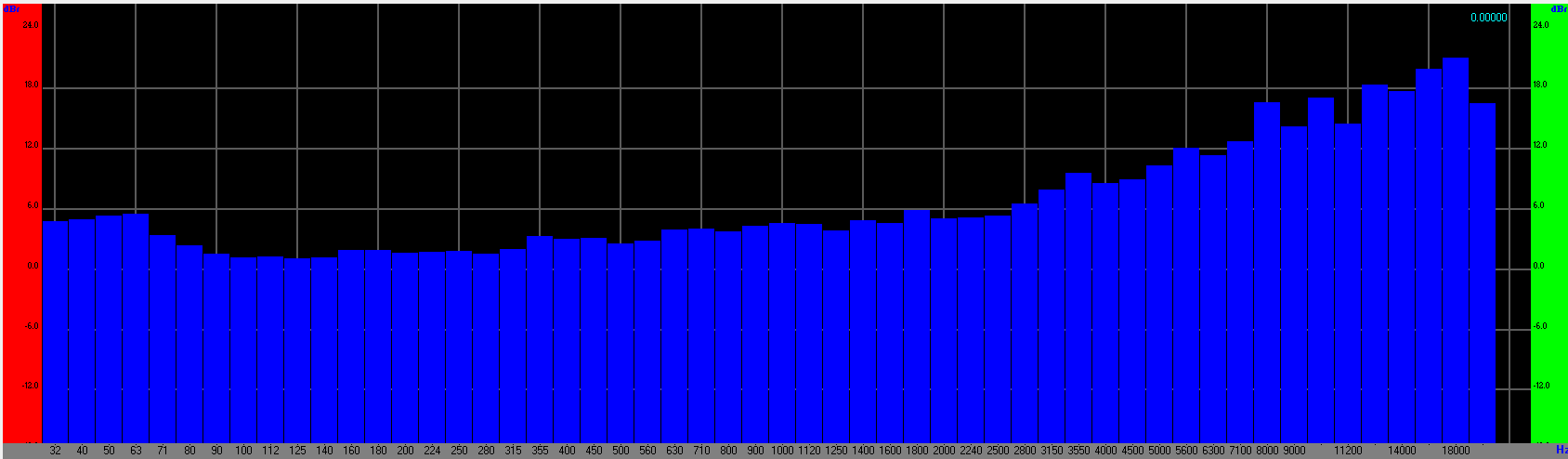

Did the system seem louder? As long as the overall SPL isn’t uncomfortable, and all other things are equal, audio humans tend to perceive a louder rig as being better. If something was actually louder, that can be measured. (Probably with tremendous ease and simplicity.)
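To make the "louder can be measured" point concrete, here’s a minimal sketch of a relative level comparison. It assumes you have two captures of the same program material as plain lists of samples. The names `capture_x` and `capture_y`, the 1 kHz tone, and the 1.5x amplitude ratio are all made up for illustration. It also reports a relative difference in dB, not calibrated SPL, which would need a calibrated mic and reference level.

```python
import math

def rms(samples):
    """Root-mean-square value of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def level_difference_db(a, b):
    """Level of signal a relative to signal b, in decibels."""
    return 20.0 * math.log10(rms(a) / rms(b))

# Hypothetical test data: the same 1 kHz tone captured twice at 48 kHz,
# with the [x] capture at 1.5 times the amplitude of the [y] capture.
SAMPLE_RATE = 48000
tone = [math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
capture_y = [0.2 * s for s in tone]
capture_x = [0.3 * s for s in tone]

difference = level_difference_db(capture_x, capture_y)
print(f"[x] measures {difference:+.2f} dB relative to [y]")
```

A 1.5x amplitude ratio works out to roughly 3.5 dB, which is plenty to make one rig seem "better" in a casual comparison.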

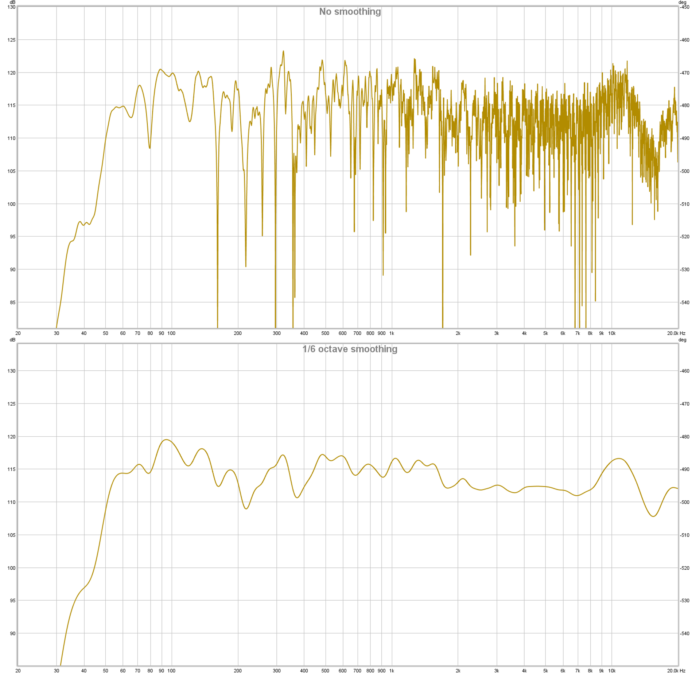

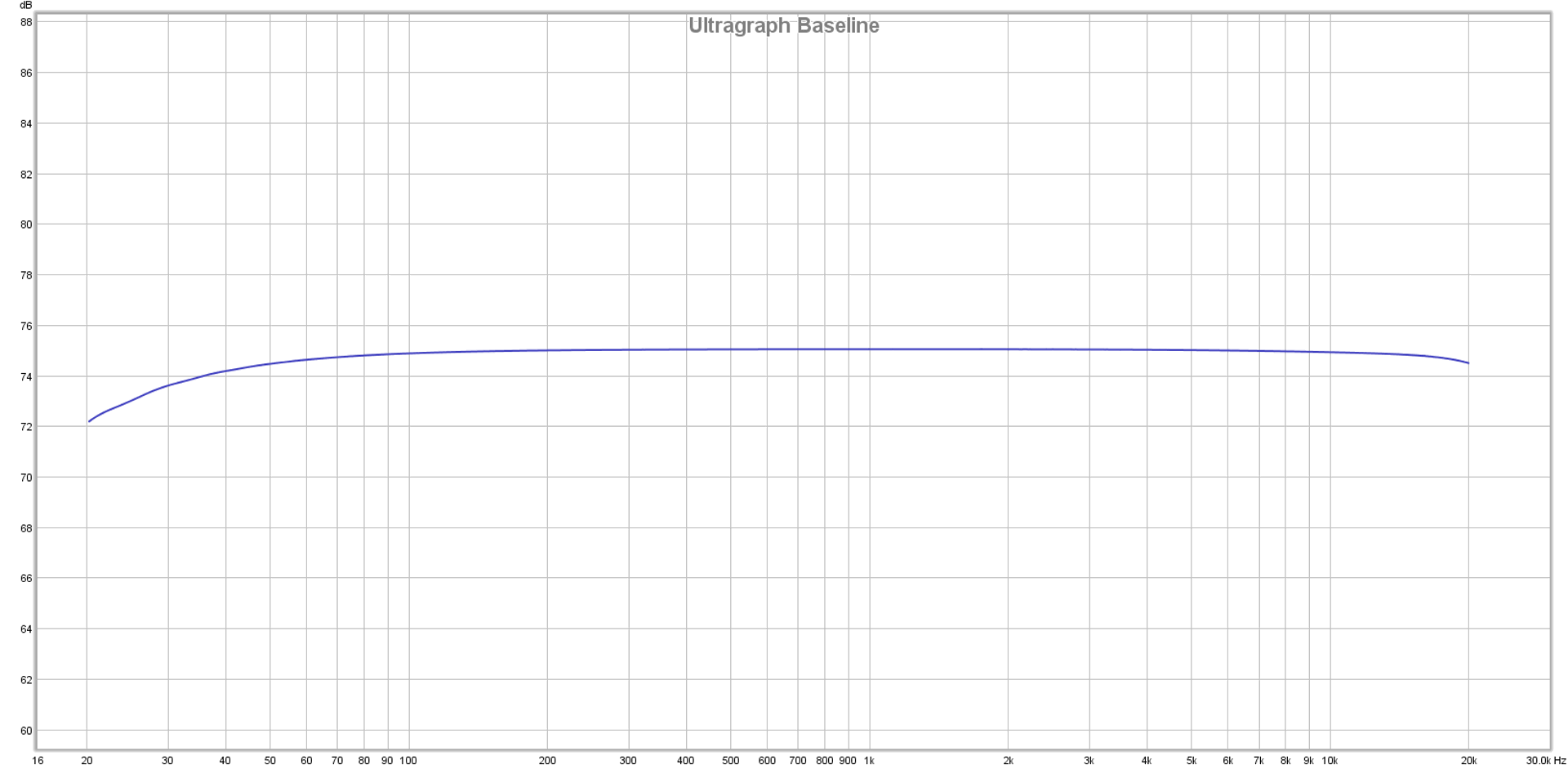

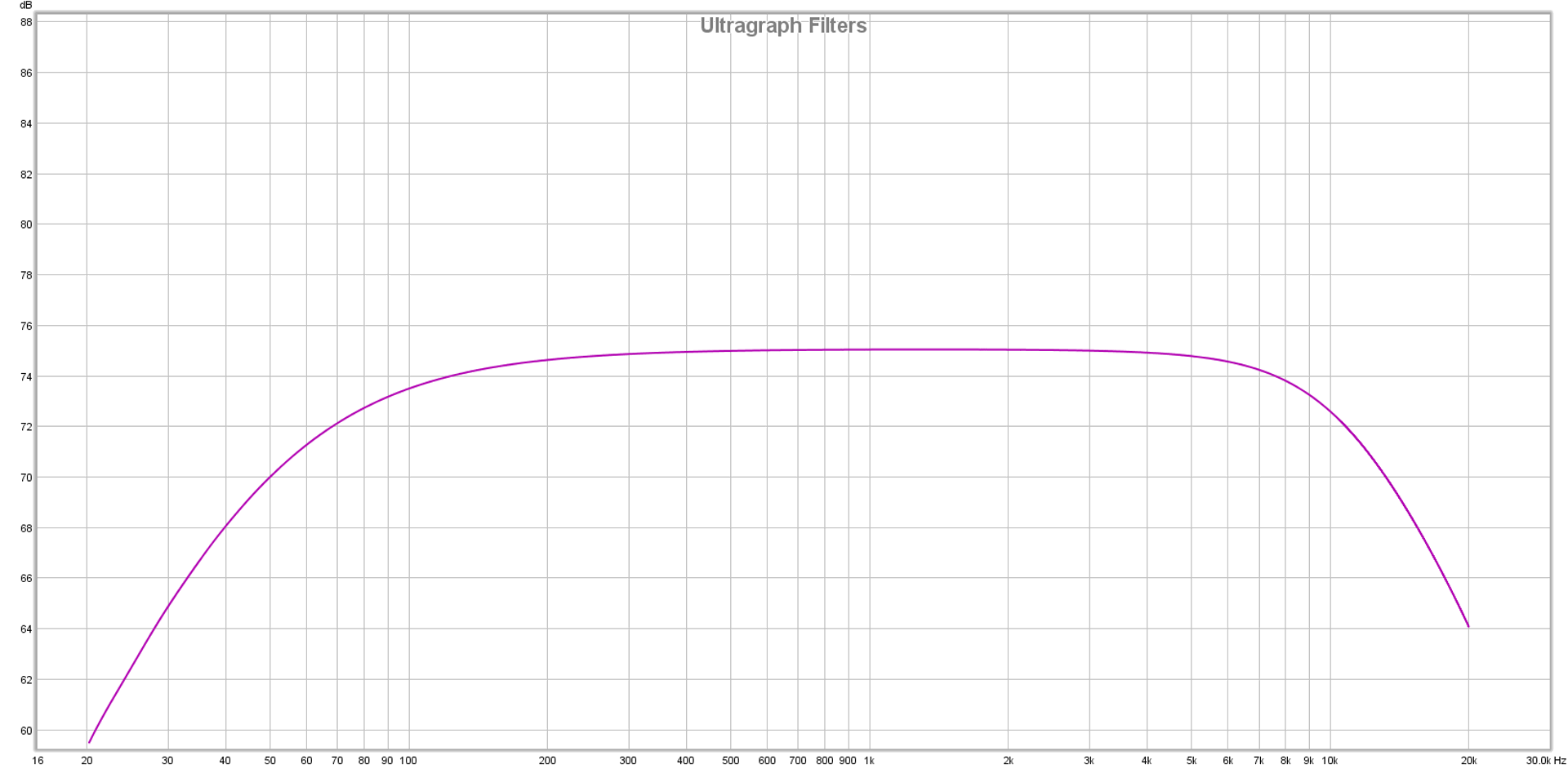

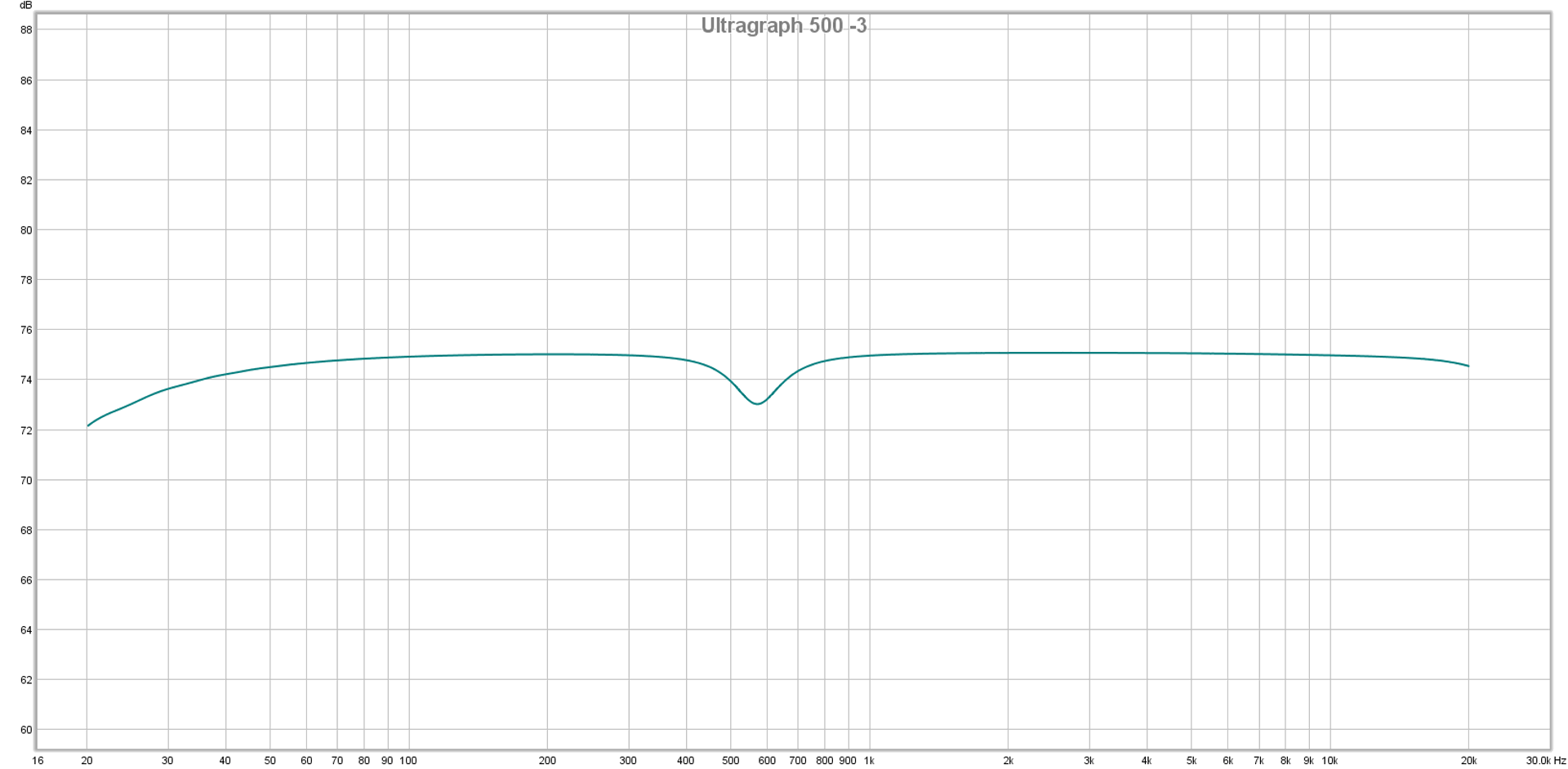

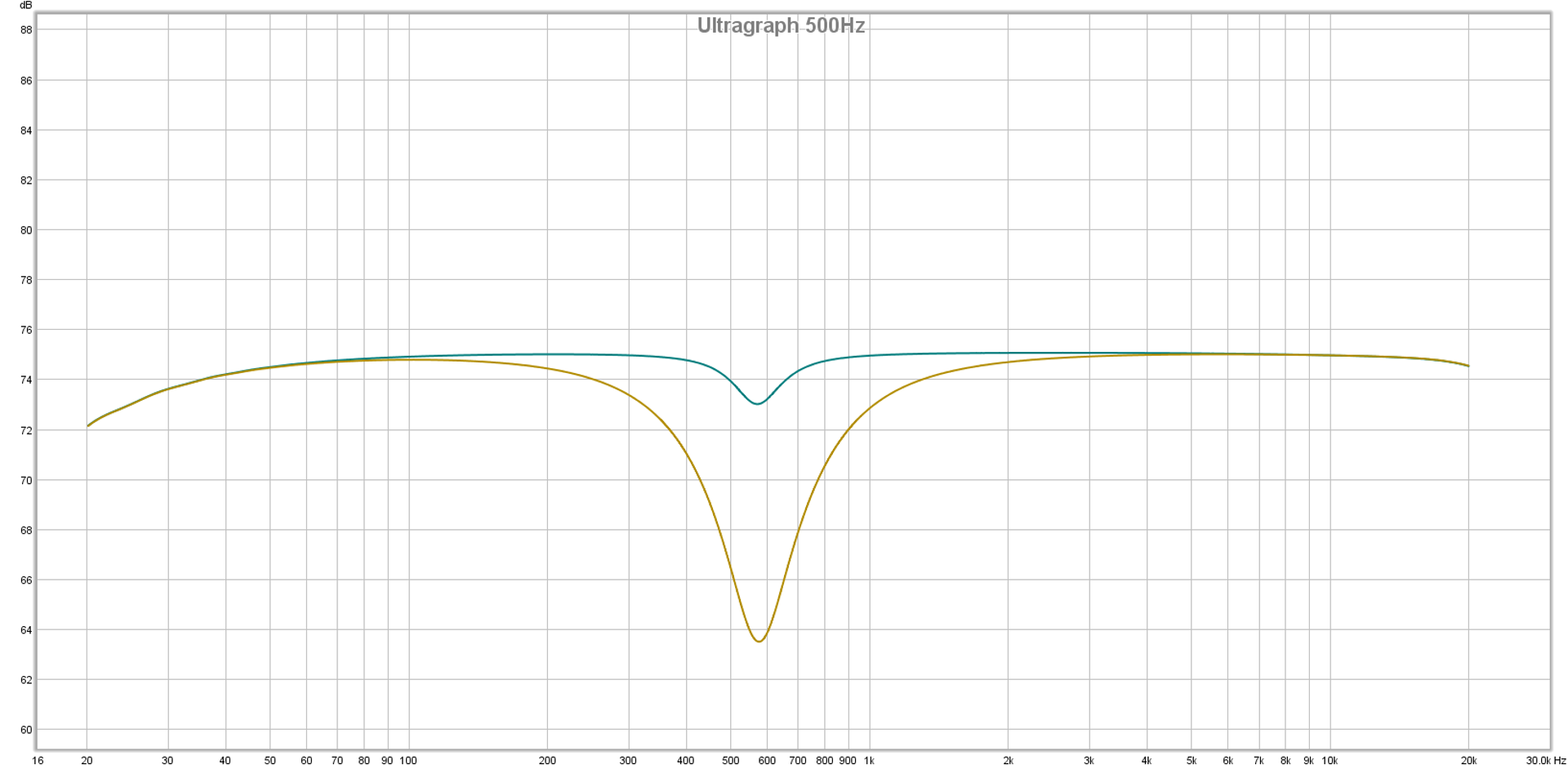

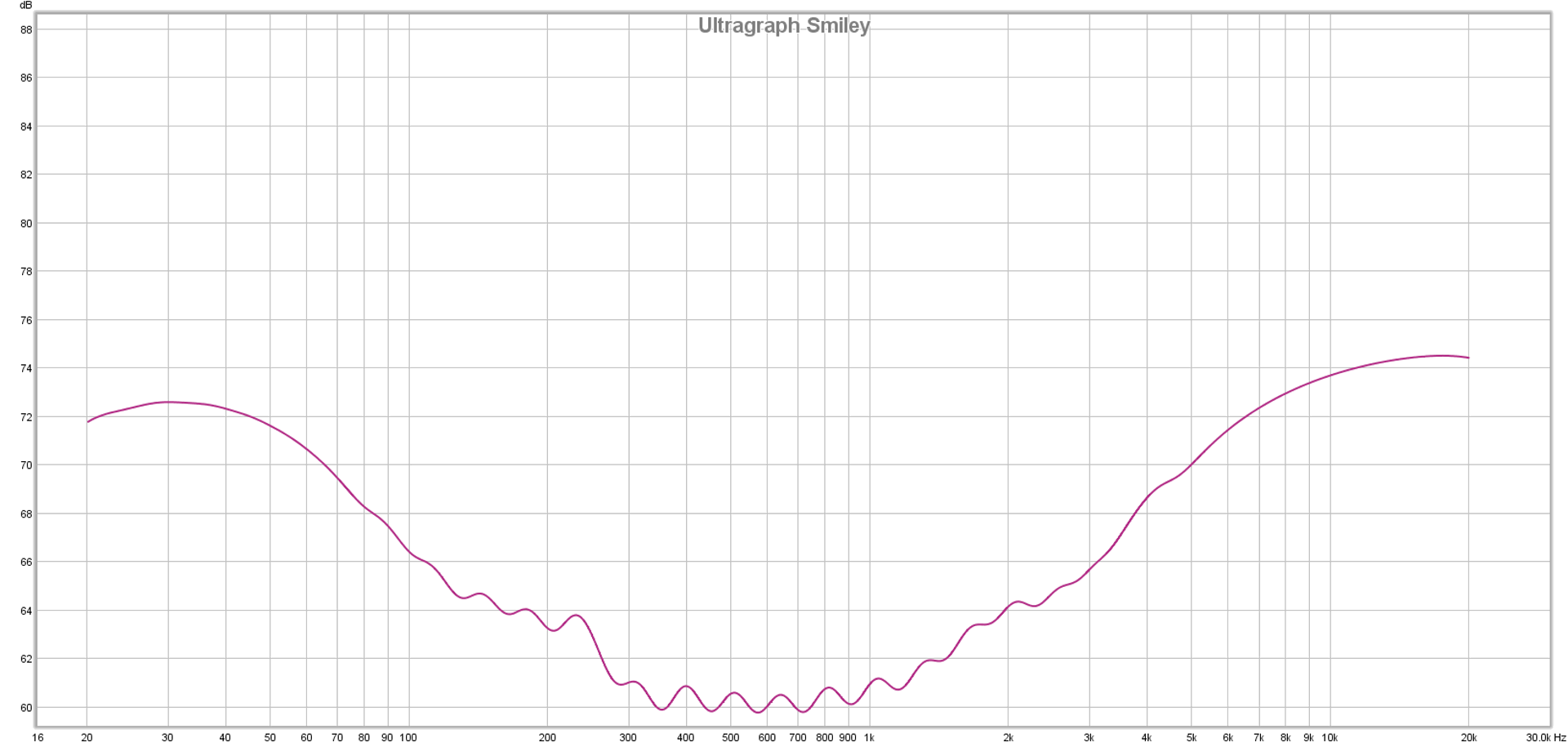

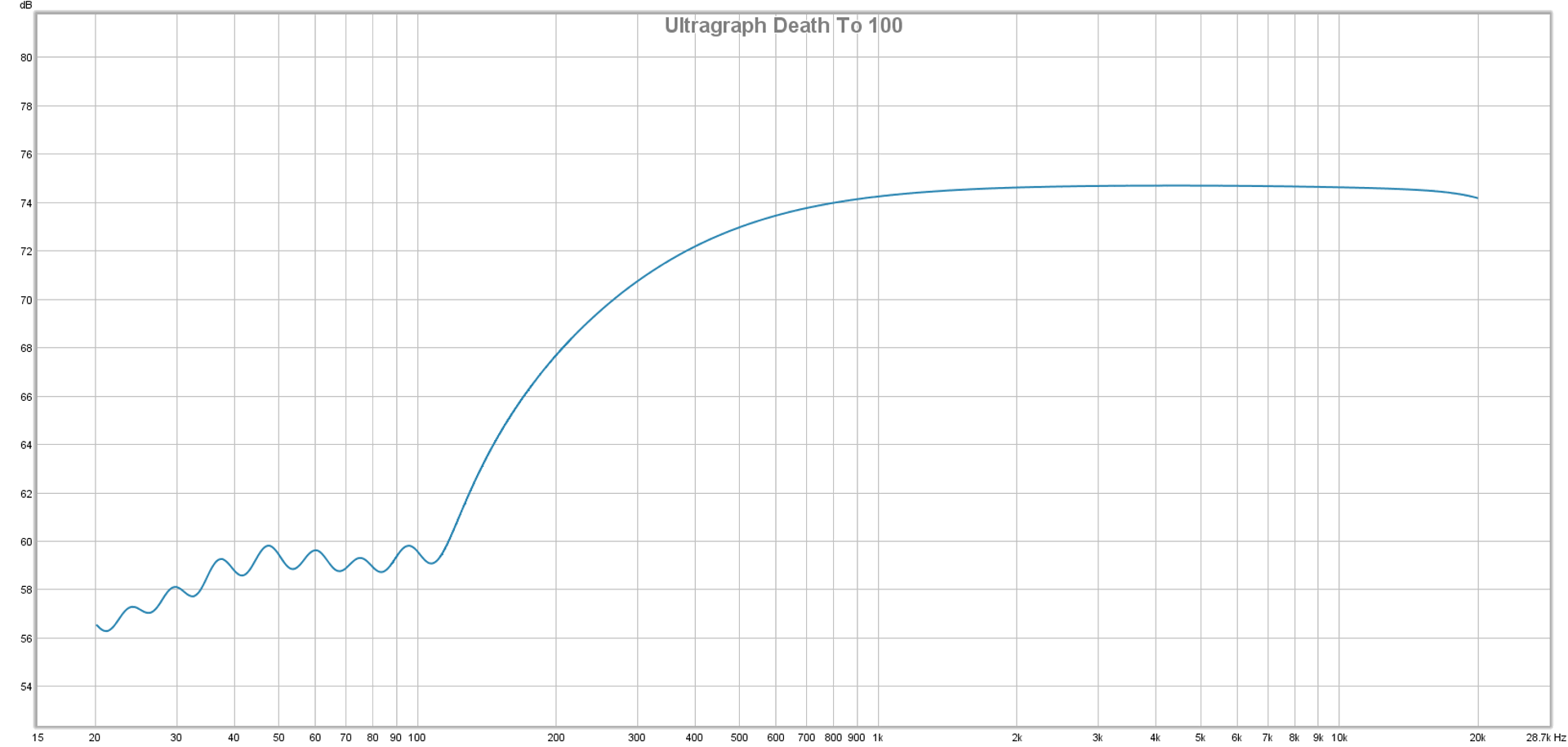

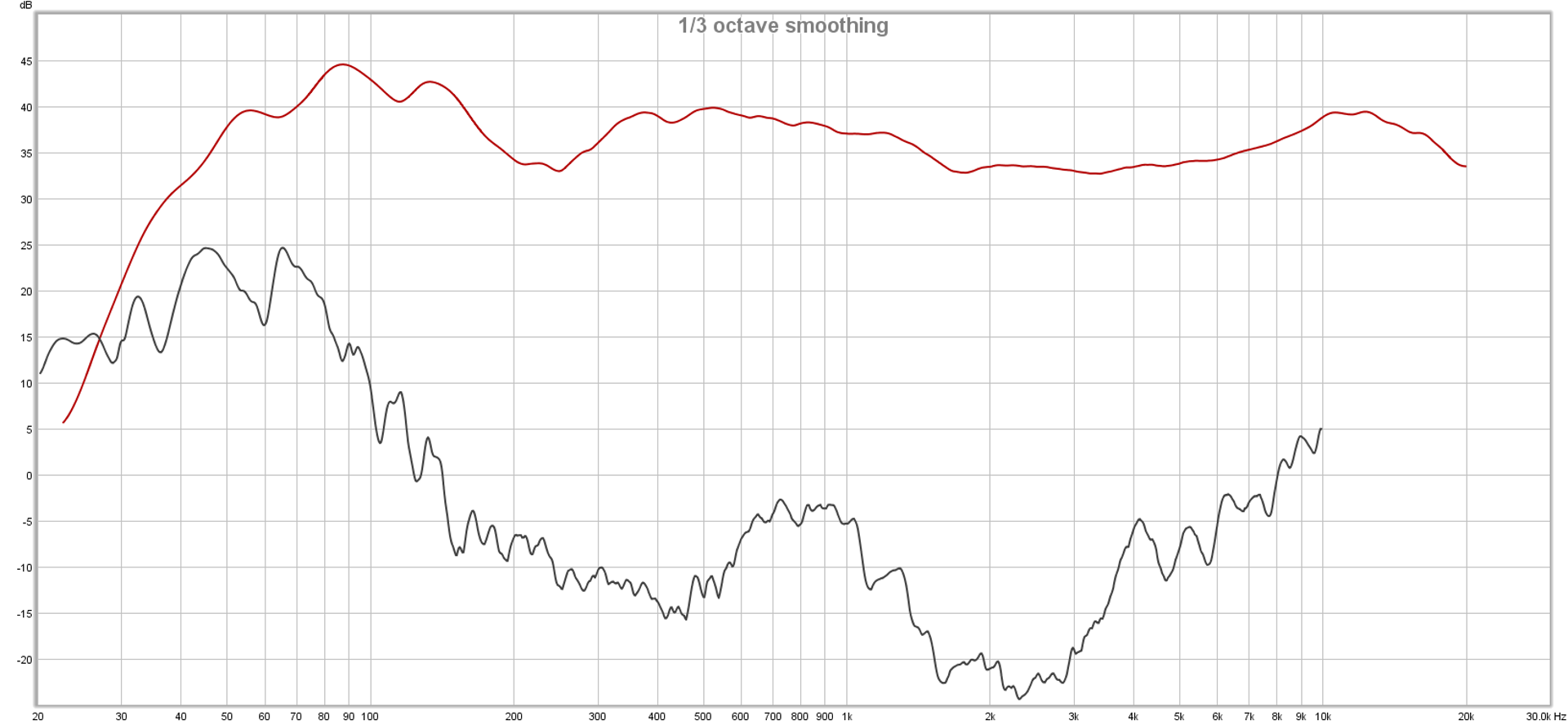

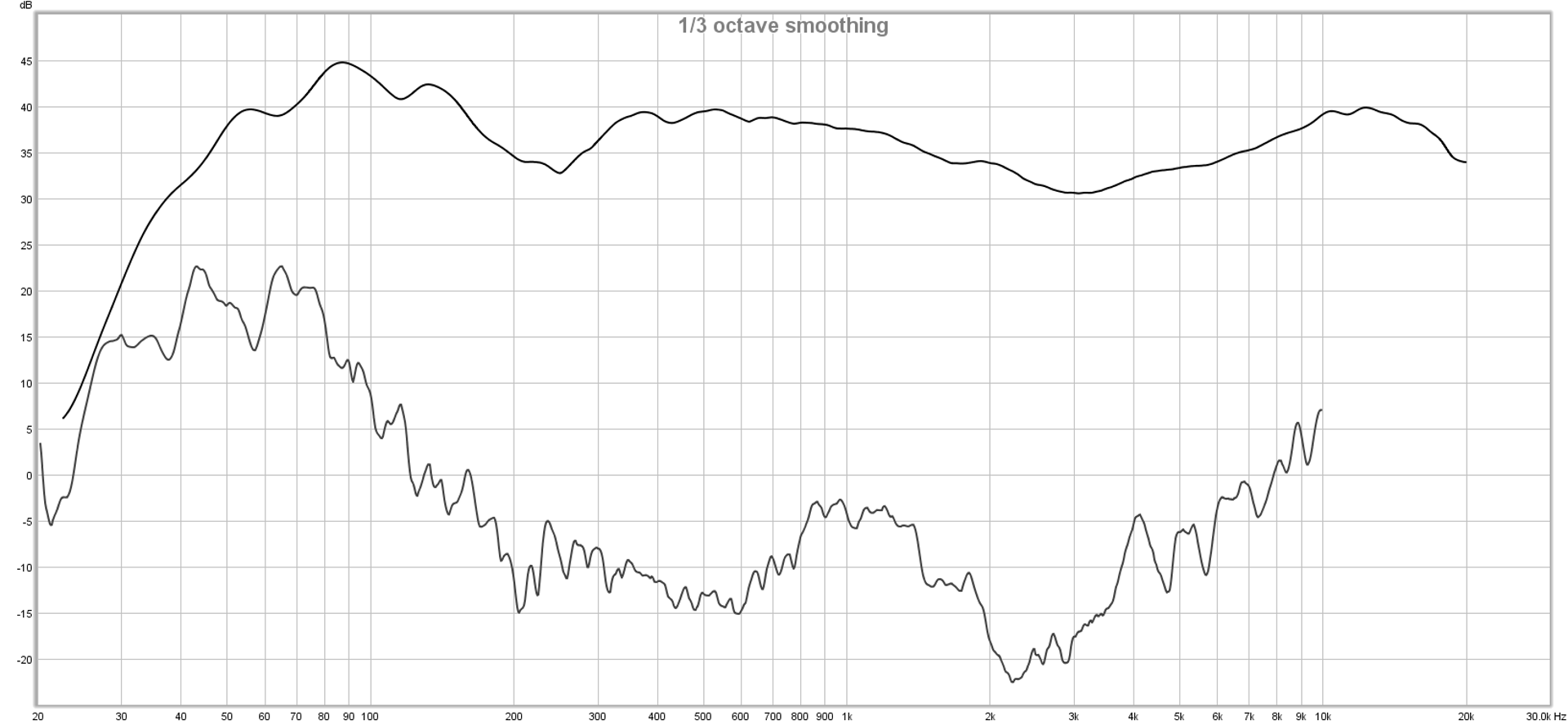

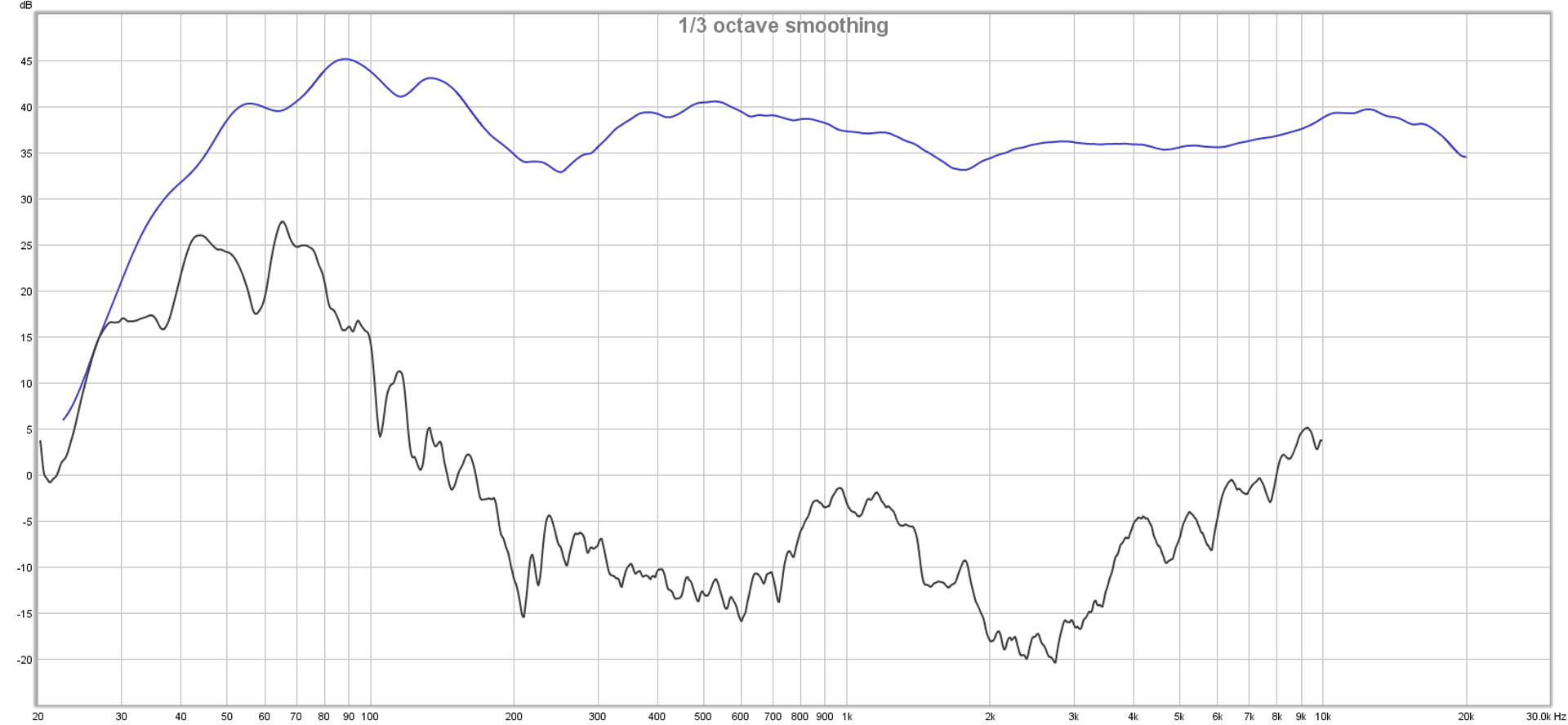

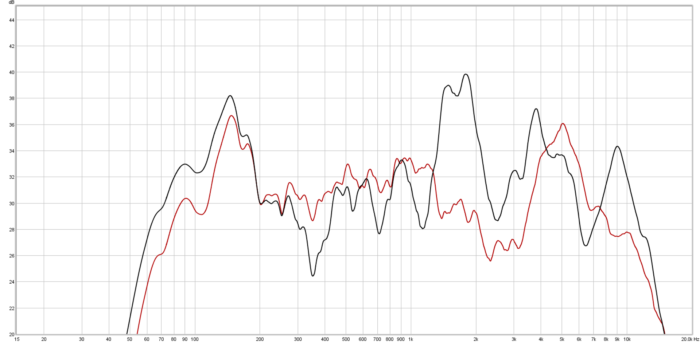

Maybe the basic system tuning solution created with [x] was just fundamentally better than what you’ve done with [y]. It’s entirely possible that you’ve gotten into a rut with the magnitude response that you tend to dial up with [y], and the other operator naturally arrives at something different. You like that something different. That something different is entirely measurable on a frequency response trace.
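As a rough illustration of what a frequency response trace boils down to, the sketch below compares a measured signal against a reference at a few spot frequencies. Everything in it is an assumption for demonstration purposes: the three test tones, the gains standing in for the other operator’s tuning, and the helper names. A real dual-channel analyzer does this across the whole band, with averaging and coherence checks that this toy version skips.

```python
import cmath
import math

SAMPLE_RATE = 16000
NUM_SAMPLES = 1600  # 0.1 s, so every test tone completes whole cycles
TEST_FREQS = [100, 1000, 5000]  # hypothetical spot frequencies in Hz

def bin_value(samples, freq_hz, sample_rate):
    """Complex amplitude of one frequency in the samples (single-bin DFT)."""
    acc = 0j
    for n, s in enumerate(samples):
        acc += s * cmath.exp(-2j * math.pi * freq_hz * n / sample_rate)
    return 2 * acc / len(samples)

def magnitude_db(measured, reference, freq_hz, sample_rate):
    """Magnitude of measured relative to reference at one frequency, in dB."""
    h = bin_value(measured, freq_hz, sample_rate) / bin_value(reference, freq_hz, sample_rate)
    return 20 * math.log10(abs(h))

def make_signal(gains):
    """Sum of the test tones, each scaled by its own linear gain."""
    return [
        sum(g * math.sin(2 * math.pi * f * n / SAMPLE_RATE)
            for f, g in zip(TEST_FREQS, gains))
        for n in range(NUM_SAMPLES)
    ]

# The reference is what went into the system. The "tuned" signal stands in
# for what came out after someone's EQ choices: lows up, highs down.
reference = make_signal([1.0, 1.0, 1.0])
tuned_x = make_signal([2.0, 1.0, 0.5])

trace = {f: magnitude_db(tuned_x, reference, f, SAMPLE_RATE) for f in TEST_FREQS}
for freq, db in trace.items():
    print(f"{freq:>5} Hz: {db:+.2f} dB")
```

With these made-up gains, the lows come out about 6 dB up and the highs about 6 dB down, which is exactly the kind of "something different" you would see on the trace.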

Maybe it wasn’t [x] at all. Maybe you were in a different room, and you liked the acoustics better. Maybe the different room has less “splatter,” or maybe it causes a low-frequency buildup that you enjoy. An RT60 measurement might reveal the difference, as might a waterfall plot, or maybe we’re right back to the basic magnitude vs. frequency trace again.
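For the curious, here’s one common way an RT60 figure can be estimated from a room impulse response: backward (Schroeder) integration of the energy, a straight-line fit over part of the decay, and extrapolation to 60 dB. This is a sketch under heavy assumptions. The impulse response is a synthetic, noise-free exponential decay that I built to have a 0.5 second RT60, and the -5 dB to -25 dB fitting window is just one conventional choice. Real rooms are messier, and real measurements are usually done per frequency band.

```python
import math

def schroeder_decay_db(impulse_response):
    """Energy decay curve in dB via backward (Schroeder) integration."""
    remaining = 0.0
    curve = [0.0] * len(impulse_response)
    for i in range(len(impulse_response) - 1, -1, -1):
        remaining += impulse_response[i] ** 2
        curve[i] = remaining
    total = curve[0]
    # The floor avoids log10(0) if the response ends in digital silence.
    return [10 * math.log10(max(c / total, 1e-30)) for c in curve]

def estimate_rt60(impulse_response, sample_rate, start_db=-5.0, end_db=-25.0):
    """Fit a line to the decay between start_db and end_db, extrapolate to -60 dB."""
    decay = schroeder_decay_db(impulse_response)
    points = [(i / sample_rate, d) for i, d in enumerate(decay)
              if end_db <= d <= start_db]
    mean_t = sum(t for t, _ in points) / len(points)
    mean_d = sum(d for _, d in points) / len(points)
    slope = (sum((t - mean_t) * (d - mean_d) for t, d in points)
             / sum((t - mean_t) ** 2 for t, _ in points))  # dB per second
    return -60.0 / slope

# Synthetic stand-in for a measured impulse response: an amplitude envelope
# that falls by 60 dB over TRUE_RT60 seconds. Not data from a real room.
SAMPLE_RATE = 8000
TRUE_RT60 = 0.5
decay_rate = 3 * math.log(10) / TRUE_RT60
synthetic_ir = [math.exp(-decay_rate * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]

rt60 = estimate_rt60(synthetic_ir, SAMPLE_RATE)
print(f"Estimated RT60: {rt60:.3f} s")
```

Run the same estimate on impulse responses from two rooms and the "less splatter" room should show up as the smaller number.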

Maybe the deployment of the system was a little different, and a couple of boxes arrived and combined in a way you preferred. Maybe it’s time to look at your phase measurements…or frequency response, again, some more.
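To show why arrival differences between boxes land in both the phase and the magnitude measurements, here’s a small idealized sketch. It assumes two identical boxes arriving at the listening position at equal level, with one path longer than the other, and nothing else going on (no reflections, no level difference, no air loss). The 0.343 m path difference and the function names are hypothetical, picked so the delay works out to about one millisecond.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value for room-temperature air

def phase_offset_degrees(freq_hz, path_difference_m):
    """Phase lag of the later arrival relative to the earlier one."""
    return (360.0 * freq_hz * path_difference_m / SPEED_OF_SOUND) % 360.0

def combined_level_db(freq_hz, path_difference_m):
    """Level of two equal arrivals summed, relative to one arrival alone."""
    delay_s = path_difference_m / SPEED_OF_SOUND
    total = 1 + cmath.exp(-2j * math.pi * freq_hz * delay_s)
    magnitude = abs(total)
    if magnitude < 1e-9:
        return float("-inf")  # treated as complete cancellation
    return 20 * math.log10(magnitude)

# Hypothetical deployment: one box is 0.343 m farther from the listener.
PATH_DIFFERENCE_M = 0.343
for freq in (250, 500, 1000):
    level = combined_level_db(freq, PATH_DIFFERENCE_M)
    print(f"{freq:>5} Hz: combined level {level:+.2f} dB")
```

In this idealized case the pair sums fully at 1 kHz, adds only about 3 dB at 250 Hz, and cancels at 500 Hz. Move one box a little and those frequencies shift, which is a perfectly physical reason for one deployment to sound different from another.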

The basic human hearing input apparatus does not have capabilities which are difficult for modern technology to meet or exceed. If you’re reading this, you very probably can no longer hear the entire theoretical bandwidth that humans can handle. Measurement mics which can sense that entire bandwidth (and maybe more) can be had for less than $100 US. What can’t be easily replicated is the giant, pattern-synthesizing computer that we keep locked inside our skulls. That’s not really relevant, though, because imagination isn’t hearing. It’s imagination. Imagination can’t be measured, but real events in the world can be. What matters in audio, what we have a chance of controlling, are those real events.